Top 60+ Software Testing Interview Questions & Answers 2025 [Free Template]

Preparing for a software testing interview can feel overwhelming, but the right preparation helps you walk in confident. This guide gives you 60+ essential questions and answers, from foundational concepts to advanced topics, so you're ready for anything.

Our questions are categorized into three levels:

- Beginner level

- Intermediate level

- Advanced level

At the end, you'll find helpful tips, strategies, and additional resources to handle tricky questions, along with personal interview questions you can prepare for.

Good luck with your interview!

Beginner Level Software Testing Interview Questions and Answers for Freshers

1. What is software testing?

Software testing checks whether software works as expected and is free of defects before release. For example, in functional testing, testers verify whether the login feature behaves correctly with both valid and invalid credentials.

Testing may be done manually or using automated test scripts. The goal is to ensure the software meets business requirements and to uncover issues early.

There are two main testing approaches:

- Manual Testing: testers execute test cases without automation tools.

- Automation Testing: testers use tools or scripts to execute tests, focusing more on planning and test design.

2. Why is software testing important in the software development process?

Quality is not only the absence of bugs; it also means meeting or exceeding user expectations. Software testing helps by:

- Maintaining consistent software quality across releases.

- Improving the user experience and identifying areas for optimization.

Read More: What is Software Testing? Definition, Guide, Tools

3. Explain the Software Testing Life Cycle (STLC)

The Software Testing Life Cycle (STLC) is a structured process followed by QA teams to ensure thorough coverage and efficient testing.

There are six stages in the STLC:

- Requirement Analysis: understanding functional and non-functional requirements; creating the RTM.

- Test Planning: defining objectives, scope, environment, risks, and schedule.

- Test Case Development: writing manual cases or automation scripts.

- Environment Setup: preparing hardware, software, and network configuration.

- Test Execution: executing test cases, logging defects, and retesting fixes.

- Test Cycle Closure: analysing results, identifying gaps, and documenting improvements.

4. What is the purpose of test data? How do you create an effective test data set?

Test data is used to simulate real user input when no production data exists — for example, login scenarios requiring usernames and passwords.

Good test data should meet these criteria:

- Data Relevance: represents real user behavior.

- Data Diversity: includes valid, invalid, boundary, and special cases.

- Data Completeness: covers all required fields.

- Data Size: uses appropriate dataset size (small or large).

- Data Security: avoids sensitive/confidential information.

- Data Independence: does not affect results of other tests.

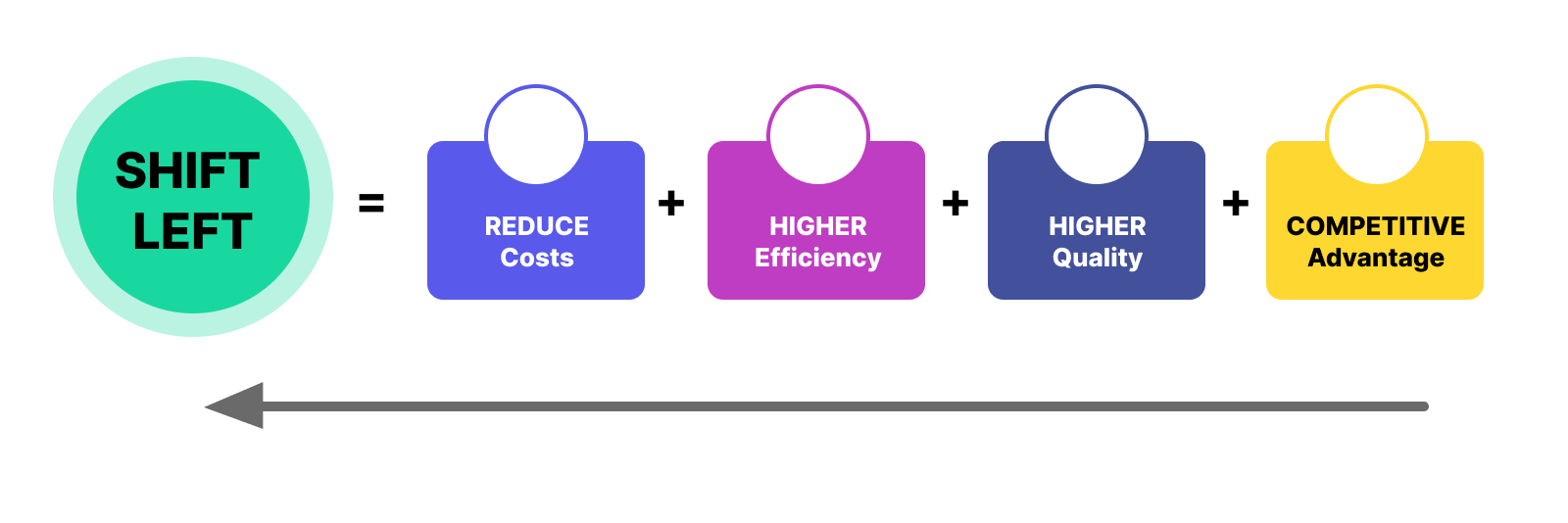

5. What is shift left testing? How is it different from shift right testing?

Shift Left Testing moves testing earlier in the development cycle, reducing cost and catching defects sooner.

Shift Right Testing occurs after release, using real user behavior to guide quality improvements and feature planning.

Below is a comparison table for Shift Left vs Shift Right testing:

| Aspect | Shift Left Testing | Shift Right Testing |
|---|---|---|
| Testing Initiation | Starts testing early in the development process | Starts testing after development and deployment |
| Objective | Early defect detection and prevention | Finding issues in production and real-world scenarios |
| Testing Activities | Static testing, unit testing, continuous integration testing | Exploratory testing, usability testing, monitoring, feedback analysis |
| Collaboration | Collaboration between developers and testers from the beginning | Collaboration with operations and customer support teams |
| Defect Discovery | Early detection and resolution of defects | Detection of defects in production environments and live usage |
| Time and Cost Impact | Reduces overall development time and cost | May increase cost due to issues discovered in production |
| Time-to-Market | Faster delivery due to early defect detection | May impact time-to-market due to post-production issues |
| Test Automation | Significant reliance on test automation for early testing | Automation used for monitoring and continuous feedback |
| Agile and DevOps Fit | Aligned with Agile and DevOps methodologies | Complements DevOps by focusing on production environments |
| Feedback Loop | Continuous feedback throughout SDLC | Continuous feedback from real users and operations |
| Risks and Benefits | Reduces risks of major defects reaching production | Identifies issues not apparent during development |
| Continuous Improvement | Improves quality based on early feedback | Improves quality based on real-world usage |

6. Explain the difference between functional testing and non-functional testing.

| Aspect | Functional Testing | Non-Functional Testing |
|---|---|---|
| Definition | Focuses on verifying the application's functionality | Assesses aspects not directly related to functionality (performance, security, usability, scalability, etc.) |
| Objective | Ensure the application works as intended | Evaluate non-functional attributes of the application |
| Types of Testing | Unit testing, integration testing, system testing, acceptance testing | Performance testing, security testing, usability testing, etc. |
| Examples | Verifying login functionality, checking search filters, etc. | Assessing system performance, security against unauthorized access, etc. |
| Timing | Performed at various stages of development | Often executed after functional testing |

7. What is the purpose of test cases and test scenarios?

A test case is a specific set of conditions and inputs executed to validate a particular aspect of the software functionality.

A test scenario is a broader concept representing the real-world situation being tested. It groups multiple related test cases to verify overall behavior.

If you’re unsure where to begin, here are popular sample test cases that provide a solid starting point:

- Test Cases For API Testing

- Test Cases For Login Page

- Test Cases For Registration Page

- Test Cases For Banking Application

- Test Cases For E-commerce Website

- Test Cases For Search Functionality

8. What is a defect, and how do you report it effectively?

A defect is a flaw in a software application that causes it to behave in an unintended way. Defects are also called bugs, and the two terms are typically used interchangeably.

To report a defect effectively:

- Reproduce the issue consistently and document clear steps.

- Use defect tracking tools like Jira, Bugzilla, or GitHub Issues.

- Provide a clear, descriptive title.

- Include key details (environment, steps, expected vs. actual results, severity, frequency, etc.).

- Add screenshots or recordings if needed.

9. Explain the Bug Life Cycle

The defect (bug) life cycle covers the steps followed when identifying, addressing, and resolving software issues. It can be described either by workflow or by status; the workflow view is shown here.

The bug life cycle follows these steps:

- Testers execute tests.

- Testers report new bugs and set the status to New.

- Leads/managers review bugs and assign developers (In Progress / Under Investigation).

- Developers investigate and reproduce the bug.

- Developers fix the bug or request more details if needed.

- Testers provide additional information if requested.

- Testers verify the fix.

- Testers close the bug, or re-open it with more details if the issue persists.
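
The status view of the same life cycle can be sketched as a small state machine. This is only an illustration: the `FIXED` status and the exact set of allowed transitions are assumptions, since every tracker (Jira, Bugzilla, and so on) names and wires its statuses differently.

```java
import java.util.EnumSet;
import java.util.Set;

public class BugLifecycle {
    // FIXED is an assumed intermediate status; real trackers name these differently.
    public enum Status { NEW, IN_PROGRESS, FIXED, CLOSED, REOPENED }

    // Statuses a bug may move to from its current status.
    public static Set<Status> allowedNext(Status current) {
        switch (current) {
            case NEW:
                return EnumSet.of(Status.IN_PROGRESS);
            case IN_PROGRESS:
                return EnumSet.of(Status.FIXED);
            case FIXED:
                return EnumSet.of(Status.CLOSED, Status.REOPENED);
            case REOPENED:
                return EnumSet.of(Status.IN_PROGRESS);
            default:
                // CLOSED is treated as terminal in this sketch.
                return EnumSet.noneOf(Status.class);
        }
    }

    public static boolean canMove(Status from, Status to) {
        return allowedNext(from).contains(to);
    }

    public static void main(String[] args) {
        System.out.println(canMove(Status.NEW, Status.IN_PROGRESS)); // prints true
        System.out.println(canMove(Status.NEW, Status.CLOSED));      // prints false
    }
}
```

Modelling the statuses this way makes it easy to explain in an interview why a bug cannot jump straight from New to Closed without being investigated and verified.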

10. How do you categorize defects?

Defects are categorized to streamline management, analysis, and troubleshooting. Common categories include:

- Severity (High, Medium, Low)

- Priority (High, Medium, Low)

- Reproducibility (Reproducible, Intermittent, Non-Reproducible)

- Root Cause (Coding Error, Design Flaw, Configuration Issue, User Error)

- Bug Type (Functional, Performance, Usability, Security, Compatibility, etc.)

- Area of Impact

- Frequency of Occurrence

Read More: How To Find Bugs on Websites

11. What is the difference between manual testing and automated testing?

Automated testing is ideal for large projects with many repetitive tests. It ensures consistency, speed, and reliability.

Manual testing is suitable for smaller tasks, exploratory testing, and scenarios requiring human intuition and creativity.

Automation can be overkill for small projects, so the choice depends on scope, timeline, and available resources.

Read More: Manual Testing vs Automation Testing

12. Define "test plan" and describe its components.

A test plan is a guiding document that outlines the strategy, scope, resources, objectives, and timelines for testing a software system. It ensures alignment, clarity, and consistency throughout the testing process.

13. What is regression testing? Why automate it?

Regression testing is performed after code updates to verify that existing functionality still works correctly.

As the system grows, regression suites become large. Manual execution becomes slow and impractical. Automated testing provides:

- Fast execution

- Higher accuracy

- Reduced human error

- Increased test coverage

- Rapid feedback for CI/CD

14. What are the advantages and disadvantages of automated testing tools?

Advantages:

- Faster test execution

- Improved accuracy

- Reusable test scripts

- High scalability

- Supports continuous testing

Disadvantages:

- High initial cost

- Requires ongoing maintenance

- Limited ability to judge UX or catch visual issues without dedicated tooling

- Needs skilled resources

- Not ideal for ad-hoc or exploratory testing

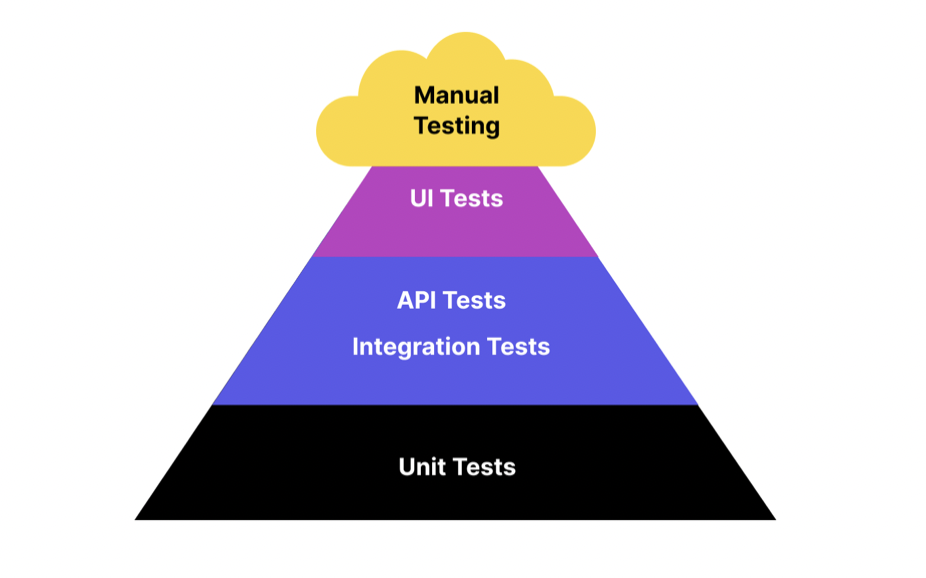

15. Explain the Test Pyramid

The test pyramid is a testing strategy that illustrates how different automated test types should be distributed based on scope and complexity. It consists of three layers: unit tests at the base, service-level tests in the middle, and UI/End-to-End (E2E) tests at the top.

- Unit Testing: Forms the base of the pyramid. Focuses on individual software components to ensure each part works correctly in isolation. These tests are fast and essential for early defect detection.

- Service Level Testing: The middle layer. Validates interactions between integrated components, including API testing, contract testing, and integration testing. Ensures different modules communicate as expected.

- End-to-End (E2E) Testing: The top layer. Confirms that the complete system functions correctly from a user's perspective. E2E tests are fewer due to their complexity, cost, and execution time.

16. Describe the differences between black-box testing, white-box testing, and gray-box testing.

Black-Box Testing:

- Definition: Testing without any knowledge of the internal code or structure.

- Focus: Validates outputs based on inputs.

- Example: UI testing, user acceptance testing (UAT).

- Used By: QA testers, end-users.

White-Box Testing:

- Definition: Testing with full visibility of code, logic, and structure.

- Focus: Ensures internal operations and logic execute correctly.

- Example: Unit tests, code coverage checks.

- Used By: Developers.

Gray-Box Testing:

- Definition: Combines black-box and white-box testing; testers have partial knowledge of internal components.

- Focus: Validates functionality and internal behaviors like APIs or database operations.

- Example: Penetration testing, integration testing.

- Used By: QA engineers, security testers.

17. How do you prioritize test cases?

Test case prioritization ensures critical areas are validated early, aligns testing with project risks, and optimizes the use of time and resources. Common prioritization strategies include:

- Risk-Based: Focus on high-risk or business-critical areas.

- Functional Importance: Test core features first.

- Frequency of Use: Prioritize functionality used most often.

- Integration Points: Validate key interactions between components.

- Performance Sensitivity: Prioritize features impacted by load or traffic.

- Security Impact: Address high-risk security functions early.

- Stakeholder Priorities: Incorporate input from product owners, PMs, or users.
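
As a rough illustration of the risk-based strategy, the sketch below scores each test case as likelihood of failure times impact of failure and sorts by that score. The 1 to 5 scales, the scoring formula, and the test case names are all assumptions made for the example; teams often weigh additional factors.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

public class TestCasePrioritizer {
    public static class TestCase {
        private final String name;
        private final int likelihood; // 1 (rarely fails) to 5 (often fails)
        private final int impact;     // 1 (minor) to 5 (business-critical)

        public TestCase(String name, int likelihood, int impact) {
            this.name = name;
            this.likelihood = likelihood;
            this.impact = impact;
        }

        public String name() {
            return name;
        }

        // Simple risk score: likelihood of failure times impact of failure.
        public int riskScore() {
            return likelihood * impact;
        }
    }

    // Returns a new list ordered from highest to lowest risk score.
    public static List<TestCase> prioritize(List<TestCase> cases) {
        List<TestCase> sorted = new ArrayList<>(cases);
        sorted.sort(Comparator.comparingInt(TestCase::riskScore).reversed());
        return sorted;
    }

    public static void main(String[] args) {
        List<TestCase> cases = List.of(
                new TestCase("Profile avatar upload", 2, 1),
                new TestCase("Checkout payment", 3, 5),
                new TestCase("Login", 2, 5));
        for (TestCase tc : prioritize(cases)) {
            System.out.println(tc.riskScore() + "  " + tc.name());
        }
    }
}
```

With these sample numbers, the checkout test runs first, login second, and the avatar upload last.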

18. What is the purpose of the traceability matrix in software testing?

A traceability matrix is a key document used to ensure full test coverage by linking requirements with test cases and other related artifacts.

Purpose:

- Tracks the relationship between requirements, test cases, and other artifacts.

- Ensures that all requirements are validated by corresponding test cases.

- Helps identify gaps in test coverage.

- Maps what needs to be tested to how it is tested.

- Ensures requirement changes are reflected in test cases.
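
At its simplest, a traceability matrix is a mapping from requirements to the test cases that cover them. The sketch below shows how such a mapping can surface coverage gaps; the class name and the `REQ-`/`TC-` identifiers are hypothetical, and real projects usually keep this data in a test management tool rather than in code.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class TraceabilityMatrix {
    // Requirement ID -> IDs of the test cases that cover it (all IDs are hypothetical).
    private final Map<String, List<String>> coverage = new LinkedHashMap<>();

    public void addRequirement(String requirementId) {
        coverage.putIfAbsent(requirementId, new ArrayList<>());
    }

    public void link(String requirementId, String testCaseId) {
        coverage.computeIfAbsent(requirementId, id -> new ArrayList<>()).add(testCaseId);
    }

    // Requirements with no linked test case are gaps in coverage.
    public List<String> uncoveredRequirements() {
        List<String> gaps = new ArrayList<>();
        for (Map.Entry<String, List<String>> entry : coverage.entrySet()) {
            if (entry.getValue().isEmpty()) {
                gaps.add(entry.getKey());
            }
        }
        return gaps;
    }

    public static void main(String[] args) {
        TraceabilityMatrix rtm = new TraceabilityMatrix();
        rtm.addRequirement("REQ-1");
        rtm.addRequirement("REQ-2");
        rtm.link("REQ-1", "TC-101");
        System.out.println("Uncovered: " + rtm.uncoveredRequirements()); // prints Uncovered: [REQ-2]
    }
}
```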

19. What is exploratory testing? Is it different from ad-hoc testing?

Exploratory testing is an unscripted manual testing approach where testers evaluate the application without predefined test cases. They rely on curiosity, experience, and spontaneous decision-making to discover issues and understand system behavior.

Exploratory testing and ad-hoc testing share similarities, but they differ in structure and intent. The table below highlights their differences.

| Aspect | Exploratory Testing | Ad Hoc Testing |
|---|---|---|
| Approach | Systematic and structured | Unplanned and unstructured |
| Planning | Tests designed and executed on the fly using tester knowledge | Performed without predefined test plans or cases |
| Test Execution | Design, execution, and learning occur simultaneously | Testing happens without structured steps |
| Purpose | Explore software and uncover deeper insights | Quick, informal checks |
| Documentation | Notes and observations recorded during testing | Little or no documentation |
| Test Case Creation | May be created on the fly | No predefined test cases |
| Skill Requirement | Requires skilled and experienced testers | Can be done by any team member |
| Reproducibility | Possible to reproduce steps afterward | Often difficult to reproduce bugs |
| Test Coverage | Can cover specific areas or discover new paths | Coverage depends heavily on tester knowledge |
| Flexibility | Adapts to discoveries during testing | Fully flexible, intuition-driven |
| Intentional Testing | Still focuses on meaningful testing goals | More unstructured and less purposeful |
| Maturity | Recognized, evolving methodology | Considered less formal or mature |

20. Explain the concept of CI/CD

CI/CD stands for Continuous Integration and Continuous Delivery (or Continuous Deployment). It is a set of practices designed to automate and streamline building, testing, and delivering software. The goal is to enable fast, reliable, and frequent updates while maintaining high quality.

Continuous Integration (CI):

- Developers frequently commit code to a shared repository.

- Each commit triggers automated builds and tests.

- Ensures new code integrates well with the existing codebase.

- Helps catch bugs early.

Continuous Delivery (CD):

- Automates the release pipeline.

- Code that passes CI is deployed automatically to a staging environment.

- Reduces manual errors in releases.

- Keeps software consistently ready for deployment.

Intermediate Level Software Testing Interview Questions and Answers

21. Explain the differences between static testing and dynamic testing. Provide examples of each.

Static Testing:

- Analyzes code or documents without executing the program.

- Identifies errors through reviews and inspections.

- Examples: code reviews, inspections, walkthroughs.

Dynamic Testing:

- Executes the software to validate functionality.

- Finds defects during runtime.

- Examples: unit testing, integration testing, system testing.

22. What is the V-model in software testing? How does it differ from the traditional waterfall model?

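The V-model aligns testing activities directly with development phases, forming a “V” shape. Each development phase on the left side is paired with a test level on the right (for example, requirements with acceptance testing, and design with integration testing). In the traditional waterfall model, testing is a single phase that follows development; in the V-model, test planning and design start alongside each development phase, which enables earlier feedback and earlier defect detection.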

23. Describe the concept of test-driven development (TDD) and how it influences the testing process.

TDD is a development approach where tests are written before the actual code. Developers create automated unit tests to define expected behavior, then write code to satisfy those tests. TDD encourages cleaner design, strong test coverage, and early defect detection.

Read More: TDD vs BDD: A Comparison

24. Discuss the importance of test environment management and the challenges involved in setting up test environments.

Test environment management ensures consistent, controlled environments for executing test cases, so QA teams can test safely outside production and reproduce issues reliably. A well-managed environment lets teams:

- Execute tests without affecting production.

- Maintain consistent, reproducible testing conditions.

- Simulate production-like environments for realistic results.

- Create various configurations (OS, browsers, devices).

Challenges in managing test environments include:

- Limited access to shared environments.

- Complex environment setup requiring technical expertise.

- Managing test data securely while maintaining integrity.

- Investing in hardware to match production environments.

Read More: How To Build a Good Test Infrastructure?

25. What are the different types of test design techniques? When would you use each of them?

Test design techniques help derive test cases from requirements or scenarios.

1. Equivalence Partitioning

- Groups input data into partitions that behave similarly.

- Tests one representative value per partition.

- Example: For valid inputs 1–100, partitions include valid (1–100) and invalid (<1 or >100).

2. Boundary Value Analysis (BVA)

- Tests edge values where bugs often appear.

- Example: For range 1–100, test 0, 1, 100, 101.

3. Decision Table Testing

- Useful where different input combinations affect outcomes.

- Represents conditions and expected actions in a table.

4. State Transition Testing

- Applies to systems whose behavior depends on current state.

- Example: ATM flow — card inserted → enter PIN → select option.

5. Exploratory Testing

- Unscripted exploration to uncover defects.

6. Error Guessing

- Uses tester experience to predict likely defect areas.
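
The first two techniques are easy to show with the 1–100 range used above. The following is a minimal sketch: `isAccepted` is a hypothetical stand-in for the system under test, and the choice of representative values (one below, one inside, one above the range) is just one reasonable option.

```java
import java.util.List;

public class RangeTestData {
    // Boundary values for an inclusive integer range: just outside and on each edge.
    public static List<Integer> boundaryValues(int min, int max) {
        return List.of(min - 1, min, max, max + 1);
    }

    // One representative per equivalence partition: below, inside, and above the range.
    public static List<Integer> partitionRepresentatives(int min, int max) {
        int middle = min + (max - min) / 2;
        return List.of(min - 1, middle, max + 1);
    }

    // Hypothetical system under test: accepts values from 1 to 100 inclusive.
    public static boolean isAccepted(int value) {
        return value >= 1 && value <= 100;
    }

    public static void main(String[] args) {
        for (int value : boundaryValues(1, 100)) {
            System.out.println(value + " -> accepted=" + isAccepted(value));
        }
    }
}
```

For the range 1–100 the boundary values come out as 0, 1, 100, and 101, matching the example above, while the partition representatives are 0, 50, and 101.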

26. Explain the concept of test data management and its significance in software testing.

Test data management (TDM) involves creating, maintaining, and controlling test data throughout the testing lifecycle.

Its goal is to ensure testers always have relevant, accurate, and realistic data to perform high-quality testing.

27. What are the common challenges in mobile app testing?

- Device Fragmentation: Many devices with varying specs and OS versions.

- OS & Platform Versions: Compatibility issues across old and new versions.

- Network Conditions: Performance varies across Wi-Fi, 3G, 4G, 5G.

- App Store Approval: Strict review guidelines slow releases.

- Interrupt Testing: Handling calls, messages, pop-ups, and low battery events.

- Limited Resources: Mobile devices have constrained CPU, memory, and battery.

28. Explain the concept of a test automation framework and give some examples.

A test automation framework provides structure, reusability, and best practices for designing and executing automated tests. Commonly cited frameworks and tools include:

- Selenium WebDriver: Open-source web testing framework supporting multiple languages.

- TestNG: Java test framework for configuration, parallel runs, and reporting.

- JUnit: Commonly used Java unit testing framework.

- Cucumber: BDD framework for writing human-readable test scenarios.

- Robot Framework: Keyword-driven framework supporting web, mobile, and desktop apps.

- Appium: Mobile test automation for Android and iOS.

Read More: Top 8 Cross-browser Testing Tools For Your QA Team

29. How would you choose the right framework for a project?

Several criteria to consider when choosing a test automation framework for your project include:

- Project Requirements: Assess the application's complexity, supported technologies, and the types of tests needed (functional, regression, performance, etc.).

- Team Expertise: Choose a framework that matches the team’s skillset and allows them to work efficiently.

- Scalability and Reusability: Prefer frameworks that support scalable design and encourage reusable components.

- Tool Integration: Ensure the framework integrates well with your automation tools and technology stack.

- Maintenance Effort: Consider how easy it is to maintain scripts and framework components over time.

- Community Support: Check for active community involvement and reliable support resources.

- Reporting and Logging: Verify that the framework provides robust reporting and logging for debugging and analysis.

- Flexibility and Customization: Choose frameworks that can be adapted to evolving project needs.

- Proof of Concept (POC): Run a small POC to validate whether the framework fits your project requirements.

Read More: Test Automation Framework – 6 Common Types

30. How to test third-party integrations?

Since third-party integrations may use different technologies than the system under test, conflicts can occur. Testing these integrations follows a process similar to the Software Testing Life Cycle:

- Understand the integration thoroughly — including functionality, APIs, data formats, and limitations. Collaborate with development and integration teams to gather details.

- Set up a dedicated test environment that mirrors production as closely as possible. Ensure all APIs and third-party systems are accessible and configured correctly.

- Perform integration testing to confirm the application interacts correctly with third-party systems. Test different scenarios, data flows, and error handling.

- Validate data mappings between the system and the third-party service.

- Test boundary conditions and error scenarios during data exchange to verify system resilience.

31. What are different categories of debugging?

- Static Debugging: Analyzing code without executing it.

- Dynamic Debugging: Analyzing the program while it runs.

- Reactive Debugging: Debugging after an issue has been observed, usually following a failure in testing or in production.

- Proactive Debugging: Identifying and preventing potential issues before they occur.

- Collaborative Debugging: Multiple engineers working together to resolve complex issues.

32. Explain the concept of data-driven testing.

Data-driven testing is a testing approach in which test cases are executed with multiple sets of test data. Instead of writing separate test cases for each data variation, testers parameterize test cases and run them with different input values stored in external sources such as spreadsheets or databases.
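
A minimal sketch of the idea, assuming a hypothetical `login` function as the system under test: the test logic is written once and executed for every data row. The rows are inlined here to keep the example self-contained; in practice they would typically be loaded from a CSV file, spreadsheet, or database, or supplied through a framework feature such as a TestNG data provider.

```java
import java.util.List;

public class DataDrivenLoginTest {
    // Hypothetical system under test: only this username/password pair is valid.
    public static boolean login(String username, String password) {
        return "testuser".equals(username) && "testpass".equals(password);
    }

    // Each row is "username,password,expectedResult".
    static final List<String> ROWS = List.of(
            "testuser,testpass,true",
            "testuser,wrongpass,false",
            "unknown,testpass,false");

    // Runs the same test logic once per data row and returns the number of failures.
    public static int runAll() {
        int failures = 0;
        for (String row : ROWS) {
            String[] fields = row.split(",");
            boolean expected = Boolean.parseBoolean(fields[2]);
            boolean actual = login(fields[0], fields[1]);
            if (actual != expected) {
                failures++;
                System.out.println("FAILED for row: " + row);
            }
        }
        return failures;
    }

    public static void main(String[] args) {
        System.out.println("Failures: " + runAll());
    }
}
```

Adding a new scenario then means adding a data row, not writing another test method.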

33. Discuss the advantages and disadvantages of open-source testing tools in a project.

| Advantages | Disadvantages |
|---|---|
| Free to use, no license fees | Limited support |
| Active communities provide assistance | Steep learning curve |
| Can be tailored to project needs | Lack of comprehensive documentation |
| Source code is accessible for modification | Integration challenges |
| Frequent updates and improvements | Occasional bugs or issues |
| Not tied to a specific vendor | Requires careful consideration of security |
| Large user base, abundant online resources | May not offer certain enterprise-level capabilities |

Read More: Top 10 Free Open-source Testing Tools, Frameworks, and Libraries

34. Explain the concept of model-based testing. What is the process of model-based testing?

Model-Based Testing (MBT) is a technique that uses models to represent system behavior and generate test cases based on those models. These models may be finite state machines, decision tables, flowcharts, or other structures capturing functionality, states, and transitions.

The process includes:

- Model Creation: Build a model that abstracts the behavior of the system under test, including states, actions, and transitions.

- Test Case Generation: Automatically or semi-automatically generate test cases based on the model.

- Test Execution: Run the generated test cases on the system.

- Result Analysis: Compare actual vs expected behavior and report discrepancies as defects.
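
The model creation and test case generation steps can be sketched with a small finite state machine. Everything here is illustrative: the ATM-style states and actions are assumptions, and enumerating all action sequences of a fixed length is only one simple generation strategy (dedicated MBT tools offer others, such as transition or state coverage).

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class ModelBasedTestGenerator {
    // The model: state -> (action -> next state). LinkedHashMap keeps output order stable.
    private final Map<String, Map<String, String>> transitions = new LinkedHashMap<>();

    public void addTransition(String from, String action, String to) {
        transitions.computeIfAbsent(from, s -> new LinkedHashMap<>()).put(action, to);
    }

    // Generates every action sequence of exactly `length` steps that the model allows from `start`.
    public List<List<String>> generate(String start, int length) {
        List<List<String>> result = new ArrayList<>();
        walk(start, length, new ArrayList<>(), result);
        return result;
    }

    private void walk(String state, int remaining, List<String> path, List<List<String>> result) {
        if (remaining == 0) {
            result.add(new ArrayList<>(path));
            return;
        }
        Map<String, String> outgoing = transitions.getOrDefault(state, Map.of());
        for (Map.Entry<String, String> edge : outgoing.entrySet()) {
            path.add(edge.getKey());
            walk(edge.getValue(), remaining - 1, path, result);
            path.remove(path.size() - 1); // backtrack before trying the next action
        }
    }

    public static void main(String[] args) {
        ModelBasedTestGenerator model = new ModelBasedTestGenerator();
        model.addTransition("IDLE", "insertCard", "CARD_INSERTED");
        model.addTransition("CARD_INSERTED", "enterPin", "AUTHENTICATED");
        model.addTransition("CARD_INSERTED", "ejectCard", "IDLE");
        model.addTransition("AUTHENTICATED", "ejectCard", "IDLE");
        for (List<String> testCase : model.generate("IDLE", 3)) {
            System.out.println(testCase);
        }
    }
}
```

Each generated sequence is a candidate test case: execute the actions against the real system and compare the state it ends in with the state the model predicts.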

35. What is TestNG?

TestNG (Test Next Generation) is a Java testing framework inspired by JUnit but offering more advanced features. It supports unit, integration, and end-to-end testing, providing flexible configuration, annotations, parallel execution, data-driven testing, and reporting.

36. Describe the role of the Page Object Model (POM) in test automation.

The Page Object Model (POM) is a design pattern that structures automation code by representing each page or UI component as a class. This class contains the locators and the methods for interacting with that page. POM improves maintainability, reusability, and readability, and reduces code duplication.
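
To show the shape of the pattern without requiring a browser, the sketch below uses a hypothetical `Browser` interface and an in-memory `FakeBrowser` in place of Selenium's `WebDriver`. These two types are invented for illustration only; in a real suite the page object would wrap `WebDriver` and `By` locators instead.

```java
import java.util.HashMap;
import java.util.Map;

public class PageObjectSketch {
    // Hypothetical stand-in for a browser driver, so the sketch runs without Selenium.
    interface Browser {
        void type(String elementId, String text);
        String valueOf(String elementId);
    }

    // In-memory fake used only for this illustration.
    static class FakeBrowser implements Browser {
        private final Map<String, String> fields = new HashMap<>();

        public void type(String elementId, String text) {
            fields.put(elementId, text);
        }

        public String valueOf(String elementId) {
            return fields.getOrDefault(elementId, "");
        }
    }

    // The page object: locators and interactions for the login page live here,
    // so tests never reference element IDs directly.
    static class LoginPage {
        private static final String USERNAME_ID = "username";
        private static final String PASSWORD_ID = "password";
        private final Browser browser;

        LoginPage(Browser browser) {
            this.browser = browser;
        }

        LoginPage enterCredentials(String username, String password) {
            browser.type(USERNAME_ID, username);
            browser.type(PASSWORD_ID, password);
            return this;
        }

        String enteredUsername() {
            return browser.valueOf(USERNAME_ID);
        }
    }

    public static void main(String[] args) {
        LoginPage page = new LoginPage(new FakeBrowser());
        page.enterCredentials("testuser", "testpass");
        System.out.println("Username field: " + page.enteredUsername());
    }
}
```

If the username field's ID changes, only `LoginPage` needs updating; every test that calls `enterCredentials` keeps working unchanged.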

37. Explain the concept of abstraction layers in a test automation framework. How do they promote scalability and reduce code duplication?

Abstraction layers organize the framework into modular components that encapsulate complexity. Each layer handles a specific responsibility, enabling cleaner structure, easier maintenance, and scalability.

Common abstraction layers include:

- UI Layer

- Business Logic Layer

- API Layer

- Data Layer

- Utility Layer

38. Explain the concept of parallel test execution. How do you implement parallel testing to optimize test execution time?

Parallel test execution involves running multiple test cases simultaneously on different threads or machines. This significantly reduces execution time and speeds up feedback, which in turn makes it practical to run a broader suite in the same time window.

Key benefits:

- Reduced execution time

- Faster feedback

- Broader test coverage within the same time budget

- Better resource utilization

- Higher productivity
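
On the implementation side, frameworks such as TestNG expose parallel execution through configuration, but the underlying mechanism can be sketched with a plain Java thread pool. The runner below is a simplified illustration, not how any particular framework is implemented; the tests are placeholders, and it assumes each test is independent of the others (no shared mutable state).

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class ParallelTestRunner {
    // Runs each test on a fixed-size thread pool and returns how many passed.
    public static int runInParallel(List<Callable<Boolean>> tests, int threads) {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        int passed = 0;
        try {
            // invokeAll blocks until every submitted test has finished.
            for (Future<Boolean> result : pool.invokeAll(tests)) {
                try {
                    if (result.get()) {
                        passed++;
                    }
                } catch (ExecutionException e) {
                    // A test that throws is counted as failed.
                }
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            pool.shutdown();
        }
        return passed;
    }

    public static void main(String[] args) {
        List<Callable<Boolean>> tests = new ArrayList<>();
        // Placeholder tests; real ones must not depend on each other.
        tests.add(() -> 2 + 2 == 4);
        tests.add(() -> "abc".toUpperCase().equals("ABC"));
        tests.add(() -> "abc".isEmpty());
        System.out.println("Passed: " + runInParallel(tests, 3) + " of " + tests.size());
    }
}
```

The main design concern in practice is test isolation: tests that share data, browser sessions, or environment state tend to become flaky once they run concurrently.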

39. Compare Selenium vs Katalon

| Category | Katalon | Selenium |
|---|---|---|
| Initial setup and prerequisites | | |
| License Type | Commercial | Open-source |
| Supported application types | Web, mobile, API, desktop | Web |
| What to maintain | Test scripts | |
| Language Support | Java/Groovy | Java, Ruby, C#, PHP, JavaScript, Python, Perl, Objective-C, etc. |
| Pricing | Free Forever plan + paid tiers | Free |
| Knowledge Base & Community Support | | Community support |

Read More: Katalon vs Selenium

40. Compare Selenium vs TestNG

| Aspect | Selenium | TestNG |
|---|---|---|
| Purpose | Suite of tools for web application testing | Testing framework for test organization & execution |
| Functionality | Automation of web browsers and web elements | Test configuration, parallel execution, grouping, data-driven testing, reporting, etc. |
| Browser Support | Supports multiple browsers | N/A |
| Limitations | Primarily focused on web application testing | N/A |
| Parallel Execution | N/A | Supports parallel execution at method, class, suite, and group levels |
| Test Configuration | N/A | Uses annotations for setup and teardown of test environments |
| Reporting & Logging | N/A | Provides detailed execution reports and supports custom listeners |
| Integration | Often paired with TestNG for test management | Commonly combined with Selenium for execution, configuration, and reporting |

Advanced Level Software Testing Interview Questions and Answers For Experienced Testers

41. How to develop a good test strategy?

When creating a test strategy document, start with a table containing the items listed below. Then hold a brainstorming session with key stakeholders (project manager, business analyst, QA lead, and development team lead) to gather the necessary information for each item. Here are some questions to ask:

Test Goals/Objectives:

- What are the specific goals and objectives of the testing effort?

- Which functionalities or features should be tested?

- Are there any performance or usability targets to achieve?

- How will the success of the testing effort be measured?

Sprint Timelines:

- What is the duration of each sprint?

- When does each sprint start and end?

- Are there any milestones or deadlines within each sprint?

- How will the testing activities be aligned with the sprint timelines?

Lifecycle of Tasks/Tickets:

- What is the process for capturing and tracking tasks or tickets?

- How will tasks or tickets flow through different stages (e.g., new, in progress, resolved)?

- Who is responsible for assigning, updating, and closing tasks or tickets?

- Is there a specific tool or system used for managing tasks or tickets?

Test Approach:

- Will it be manual testing, automated testing, or a combination of both?

- How will the test approach align with the development process (e.g., Agile, Waterfall)?

Testing Types:

- What types of testing will be performed (e.g., functional testing, performance testing, security testing)?

- Are there any specific criteria or standards for each testing type?

- How will each testing type be prioritized and scheduled?

- Are there any dependencies for certain testing types?

Roles and Responsibilities:

- What are the different roles involved in the testing process?

- What are the responsibilities of each role?

Testing Tools:

- What are the preferred testing tools for different testing activities (open source/vendor-based)?

- Are there any specific criteria for selecting testing tools?

- How will the testing tools be integrated into the overall testing process?

- Is there a plan for training and support in effectively using the testing tools?

42. How to manage changes in testing requirements?

- Have a backup plan in case changes are needed in the test plan.

- Communicate with stakeholders (project managers, developers, business analysts) to explain:

- Why changes are needed.

- What the objectives are.

- How it will affect the project.

- Update the test plan to reflect the changes.

- Adjust test artifacts (test cases, scripts, reports) as needed.

- Continue the test cycle based on the updated plan.

43. What are some key metrics to measure testing success?

Several important test metrics include:

- Test Coverage: the extent to which the software has been tested with respect to specific criteria

- Defect Density: the number of defects (bugs) found in a specific software component or module, divided by the size or complexity of that component

- Defect Removal Efficiency (DRE): the ratio of defects found and fixed during testing to the total number of defects found throughout the entire development lifecycle. A higher DRE value indicates that testing is effective in catching and fixing defects early in the development process

- Test Pass Rate: the percentage of test cases that have passed successfully out of the total executed test cases. It indicates the overall success of the testing effort

- Test Automation Coverage: the percentage of test cases that have been automated
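
Three of these metrics reduce to simple ratios, sketched below. The formulas follow the definitions above under two stated assumptions: size is measured in thousands of lines of code (KLOC), and the total defect count for DRE is taken as defects found in testing plus defects found after release. All sample figures are made up.

```java
public class TestMetrics {
    // Defects per unit of size, here per thousand lines of code (KLOC).
    public static double defectDensity(int defects, double sizeInKloc) {
        return defects / sizeInKloc;
    }

    // Share of all known defects that were caught during testing, as a percentage.
    public static double defectRemovalEfficiency(int foundInTesting, int foundAfterRelease) {
        return 100.0 * foundInTesting / (foundInTesting + foundAfterRelease);
    }

    // Percentage of executed test cases that passed.
    public static double passRate(int passed, int executed) {
        return 100.0 * passed / executed;
    }

    public static void main(String[] args) {
        // All figures below are made-up sample numbers.
        System.out.println("Defect density: " + defectDensity(30, 12.0) + " per KLOC"); // 2.5
        System.out.println("DRE: " + defectRemovalEfficiency(90, 10) + "%");            // 90.0
        System.out.println("Pass rate: " + passRate(180, 200) + "%");                   // 90.0
    }
}
```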

Learn More: What is a Test Report? How To Create One?

44. What is an Object Repository?

An object repository is a central storage location that holds all the information about the objects or elements of the application being tested. It is a key component of test automation frameworks and is used to store and manage the properties and attributes of user interface (UI) elements or objects.

45. Why do we need an Object Repository?

Having an Object Repository brings several benefits:

- Modularity: Test scripts can refer to objects by name or identifier stored in the repository, making them more readable and maintainable.

- Centralization: All object-related information is stored centrally in the repository, making it easier to update, maintain, and manage the objects, especially when there are changes in the application's UI.

- Reusability: Testers can reuse the same objects across multiple test scripts, promoting reusability and reducing redundancy in test automation code.

- Enhanced Collaboration: The object repository can be accessed by the entire test team, promoting collaboration and consistency in identifying and managing objects.
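To make the idea concrete, here is a minimal in-memory sketch of an object repository in plain Java. Real tools usually persist the repository in files and map entries to framework-specific locators; the class name, the logical names, and the `strategy=value` locator format below are assumptions for illustration only.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.NoSuchElementException;

// A minimal in-memory object repository (hypothetical design for this sketch).
final class ObjectRepository {

    // Maps a logical object name to a locator string such as "id=username".
    private final Map<String, String> locators = new LinkedHashMap<>();

    // Adds or updates an entry; returns this so calls can be chained.
    ObjectRepository register(String name, String locator) {
        locators.put(name, locator);
        return this;
    }

    // Looks up a locator by logical name and fails loudly if it is unknown.
    String find(String name) {
        String locator = locators.get(name);
        if (locator == null) {
            throw new NoSuchElementException("No object registered as '" + name + "'");
        }
        return locator;
    }

    public static void main(String[] args) {
        ObjectRepository repo = new ObjectRepository()
                .register("login.username", "id=username")
                .register("login.password", "id=password");

        // If the UI changes, only the repository entry is updated;
        // test scripts keep referring to "login.username".
        repo.register("login.username", "css=input[name='user']");
        System.out.println(repo.find("login.username"));
    }
}
```

Because scripts refer only to logical names, a UI change is handled in one place, which is the centralization and reusability benefit described above.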

46. How do you ensure test case reusability and maintainability in your test suites?

There are several best practices when it comes to test case reusability and maintainability:

- Break down test cases into smaller, independent modules or functions.

- Each module should focus on testing a specific feature or functionality.

- Use a centralized object repository to store and manage object details.

- Separate object details from test scripts for easier maintenance.

- Decouple test data from test scripts using data-driven testing techniques.

- Store test data in external files (e.g., CSV, Excel, or databases) to facilitate easy updates and reusability.

- Use test automation frameworks (e.g., TestNG, JUnit, Robot Framework) to provide structure.

- Leverage libraries or utilities for common test tasks, such as logging, reporting, and data handling.
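The data-driven practice can be sketched as follows. The `login` method is a hypothetical stand-in for the system under test, and the rows are kept in memory so the example runs on its own; in a real suite they would come from an external CSV or Excel file.

```java
import java.util.ArrayList;
import java.util.List;

final class DataDrivenLoginCheck {

    // Hypothetical system under test: accepts only one known credential pair.
    static boolean login(String username, String password) {
        return "testuser".equals(username) && "testpass".equals(password);
    }

    // Runs every row (username,password,expectedResult) and returns the rows
    // whose actual result did not match the expected result.
    static List<String> run(List<String> csvRows) {
        List<String> failures = new ArrayList<>();
        for (String row : csvRows) {
            String[] cols = row.split(",", -1); // -1 keeps empty columns
            boolean expected = Boolean.parseBoolean(cols[2]);
            boolean actual = login(cols[0], cols[1]);
            if (actual != expected) {
                failures.add(row);
            }
        }
        return failures;
    }

    public static void main(String[] args) {
        // In a real suite these rows would be read from an external file,
        // for example with java.nio.file.Files.readAllLines(...).
        List<String> rows = List.of(
                "testuser,testpass,true",
                "testuser,wrongpass,false",
                ",testpass,false");
        System.out.println("Failed rows: " + run(rows));
    }
}
```

Adding a new scenario then means adding a data row, not writing a new test method.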

47. Write a test script using Selenium WebDriver with Java to verify the functionality of entering data in text boxes

Assumptions:

- We are testing a simple web page with two text boxes: "username" and "password".

- The website URL is "https://example.com/login".

- We are using Chrome WebDriver. Make sure to have the ChromeDriver executable available and set the system property accordingly.

```java
import org.openqa.selenium.By;
import org.openqa.selenium.WebDriver;
import org.openqa.selenium.WebElement;
import org.openqa.selenium.chrome.ChromeDriver;

public class TextBoxTest {
    public static void main(String[] args) {
        // Set ChromeDriver path
        System.setProperty("webdriver.chrome.driver", "path/to/chromedriver");

        // Create a WebDriver instance
        WebDriver driver = new ChromeDriver();

        // Navigate to the test page
        driver.get("https://example.com/login");

        // Find the username and password text boxes
        WebElement usernameTextBox = driver.findElement(By.id("username"));
        WebElement passwordTextBox = driver.findElement(By.id("password"));

        // Test Data
        String validUsername = "testuser";
        String validPassword = "testpass";

        // Test case 1: Enter valid data into the username text box
        usernameTextBox.sendKeys(validUsername);
        String enteredUsername = usernameTextBox.getAttribute("value");
        if (enteredUsername.equals(validUsername)) {
            System.out.println("Test case 1: Passed - Valid data entered in the username text box.");
        } else {
            System.out.println("Test case 1: Failed - Valid data not entered in the username text box.");
        }

        // Test case 2: Enter valid data into the password text box
        passwordTextBox.sendKeys(validPassword);
        String enteredPassword = passwordTextBox.getAttribute("value");
        if (enteredPassword.equals(validPassword)) {
            System.out.println("Test case 2: Passed - Valid data entered in the password text box.");
        } else {
            System.out.println("Test case 2: Failed - Valid data not entered in the password text box.");
        }

        // Close the browser
        driver.quit();
    }
}
```

48. Write a test script using Selenium WebDriver with Java to verify the error message for invalid email format.

```java
import org.openqa.selenium.By;
import org.openqa.selenium.WebDriver;
import org.openqa.selenium.WebElement;
import org.openqa.selenium.chrome.ChromeDriver;

public class InvalidEmailTest {
    public static void main(String[] args) {
        // Set ChromeDriver path
        System.setProperty("webdriver.chrome.driver", "path/to/chromedriver");

        // Create a WebDriver instance
        WebDriver driver = new ChromeDriver();

        // Navigate to the test page
        driver.get("https://example.com/contact");

        // Find the email input field and submit button
        WebElement emailField = driver.findElement(By.id("email"));
        WebElement submitButton = driver.findElement(By.id("submitBtn"));

        // Test Data - Invalid email format
        String invalidEmail = "invalidemail";

        // Test case 1: Enter invalid email format and click submit
        emailField.sendKeys(invalidEmail);
        submitButton.click();

        // Find the error message element
        WebElement errorMessage = driver.findElement(By.className("error-message"));

        // Check if the error message is displayed and contains the expected text
        if (errorMessage.isDisplayed() && errorMessage.getText().equals("Invalid email format")) {
            System.out.println("Test case 1: Passed - Error message for invalid email format is displayed.");
        } else {
            System.out.println("Test case 1: Failed - Error message for invalid email format is not displayed or incorrect.");
        }

        // Close the browser
        driver.quit();
    }
}
```

49. How to do usability testing?

1. Decide which part of the product/website you want to test

2. Define the hypothesis (what will users do when they land on this part of the website? How do we verify that hypothesis?)

3. Set clear criteria for the usability test session

4. Write a study plan and script

5. Find suitable participants for the test

6. Conduct your study

7. Analyze collected data

50. How do you ensure that the testing team is aligned with the development team and the product roadmap?

- Involve testing team members in project planning and product roadmap discussions from the beginning.

- Attend sprint planning meetings, product backlog refinement sessions, and other relevant meetings to understand upcoming features and changes.

- Promote regular communication between development and testing teams to share progress, updates, and challenges.

- Utilize common tools for issue tracking, project management, and test case management to foster collaboration and transparency.

- Define and track key performance indicators (KPIs) that measure the progress and quality of the project.

- Consider having developers participate in testing activities like unit testing and code reviews, and testers assist in test automation.

51. How do you ensure that test cases are comprehensive and cover all possible scenarios?

Although it is not possible to test every situation, testers should go beyond the common conditions. Besides the regular (happy-path) tests, we should also consider unusual or unexpected situations: edge cases and negative scenarios that involve uncommon inputs or usage patterns. Covering these cases improves the coverage of our testing. Attackers often target non-standard scenarios, so testing them also makes our tests more effective at catching security-relevant defects.
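As a small illustration of edge cases and negative scenarios, the sketch below checks a hypothetical username rule (3 to 20 letters or digits) at its boundaries and with clearly invalid input. The rule and the class name are assumptions made for this example.

```java
final class UsernameRules {

    // Hypothetical rule used only for this sketch: 3 to 20 letters or digits.
    static boolean isValid(String username) {
        return username != null && username.matches("[A-Za-z0-9]{3,20}");
    }

    public static void main(String[] args) {
        // Boundary values that should be accepted: minimum and maximum length.
        String[] valid = { "abc", "a".repeat(20), "User123" };

        // Negative and edge cases that should be rejected: null, empty,
        // just below and just above the limits, whitespace, injection-style input.
        String[] invalid = { null, "", "ab", "a".repeat(21), "bad name", "x' OR '1'='1" };

        for (String input : valid) {
            System.out.println((isValid(input) ? "PASS" : "FAIL") + " accepted: " + input);
        }
        for (String input : invalid) {
            System.out.println((!isValid(input) ? "PASS" : "FAIL") + " rejected: " + input);
        }
    }
}
```

The values just inside and just outside each limit (3 and 2, 20 and 21) are where off-by-one defects tend to appear, which is why they are listed explicitly.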

52. What are defect triage meetings?

Defect triage meetings are an important part of the software development and testing process. They are typically held to prioritize and manage the defects (bugs) found during testing or reported by users. The primary goal of defect triage meetings is to decide which defects should be addressed first and how they should be resolved.
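A triage meeting is a human discussion, but the ordering it produces can be sketched in code. The example below sorts defects by severity first and by number of affected users second; this ordering rule, the severity levels, and the field names are assumptions for illustration, and real teams often weigh business priority as well.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

final class DefectTriage {

    // Declared from most to least urgent, so natural enum order is triage order.
    enum Severity { CRITICAL, MAJOR, MINOR }

    // Hypothetical minimal defect record for this sketch.
    static final class Defect {
        final String id;
        final Severity severity;
        final int affectedUsers;

        Defect(String id, Severity severity, int affectedUsers) {
            this.id = id;
            this.severity = severity;
            this.affectedUsers = affectedUsers;
        }
    }

    // Returns defect ids ordered by severity, then by affected users (descending).
    static List<String> triageOrder(List<Defect> defects) {
        List<Defect> sorted = new ArrayList<>(defects);
        sorted.sort(Comparator
                .comparing((Defect d) -> d.severity)
                .thenComparing(Comparator.comparingInt((Defect d) -> d.affectedUsers).reversed()));
        List<String> ids = new ArrayList<>();
        for (Defect d : sorted) {
            ids.add(d.id);
        }
        return ids;
    }

    public static void main(String[] args) {
        List<Defect> backlog = List.of(
                new Defect("D-1", Severity.MINOR, 500),
                new Defect("D-2", Severity.CRITICAL, 10),
                new Defect("D-3", Severity.MAJOR, 50),
                new Defect("D-4", Severity.CRITICAL, 200));
        System.out.println(triageOrder(backlog)); // prints [D-4, D-2, D-3, D-1]
    }
}
```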

53. What is the average age of a defect in software testing?

The average age of a defect in software testing refers to the average amount of time a defect remains open or unresolved from the moment it is identified until it is fixed and verified. It is a crucial metric used to measure the efficiency and effectiveness of the defect resolution process in the software development lifecycle.

The average age of a defect can vary widely depending on factors such as the complexity of the software, the testing process, the size of the development team, the severity of the defects, and the overall development methodology (e.g., agile, waterfall, etc.).
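As a worked example, the average defect age is the total number of days defects stayed open divided by the number of defects. The sketch below assumes each defect has an "opened" date and a "verified" date; the class and field names are hypothetical.

```java
import java.time.LocalDate;
import java.time.temporal.ChronoUnit;
import java.util.List;

final class DefectAge {

    // Hypothetical minimal defect record for this sketch.
    static final class Defect {
        final LocalDate opened;   // date the defect was identified
        final LocalDate verified; // date the fix was verified

        Defect(LocalDate opened, LocalDate verified) {
            this.opened = opened;
            this.verified = verified;
        }
    }

    // Average number of days between identification and verified fix.
    static double averageAgeInDays(List<Defect> defects) {
        if (defects.isEmpty()) {
            return 0.0;
        }
        long totalDays = 0;
        for (Defect d : defects) {
            totalDays += ChronoUnit.DAYS.between(d.opened, d.verified);
        }
        return (double) totalDays / defects.size();
    }

    public static void main(String[] args) {
        List<Defect> defects = List.of(
                new Defect(LocalDate.of(2025, 1, 1), LocalDate.of(2025, 1, 5)),   // 4 days
                new Defect(LocalDate.of(2025, 1, 10), LocalDate.of(2025, 1, 12)), // 2 days
                new Defect(LocalDate.of(2025, 2, 1), LocalDate.of(2025, 2, 10))); // 9 days
        // (4 + 2 + 9) / 3 = 5.0
        System.out.println("Average defect age (days): " + averageAgeInDays(defects));
    }
}
```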

54. What are some essential qualities of an experienced QA or Test Lead?

An experienced QA or Test Lead should have technical expertise, domain knowledge, leadership skills, and communication skills. An effective QA leader is one who can inspire, motivate, and guide the testing team, keeping them focused on goals and objectives.

Read More: 9 Steps To Become a Good QA Lead

55. Can you provide an example of a particularly challenging defect you have identified and resolved in your previous projects?

There is no single right answer to this question because it depends on your experience. You can follow this framework to give a detailed, structured response:

Step 1: Describe the defect in detail, including how it was identified (e.g., through testing or customer feedback).

Step 2: Explain why it was particularly challenging.

Step 3: Outline the steps you took to resolve the defect.

Step 4: Discuss any obstacles you faced and how you overcame them.

Step 5: Explain how you ensured that the defect was fully resolved, and the impact it had on the project and stakeholders.

Step 6: Reflect on what you learned from this experience.

56. What is DevOps?

DevOps is a software development approach and culture that emphasizes collaboration, communication, and integration between software development (Dev) and IT operations (Ops) teams. It aims to streamline and automate the software delivery process, enabling organizations to deliver high-quality software faster and more reliably.

Read More: DevOps Implementation Strategy

57. What is the difference between Agile and DevOps?

Agile focuses on iterative software development and customer collaboration, while DevOps extends beyond development to address the entire software delivery process, emphasizing automation, collaboration, and continuous feedback. Agile is primarily a development methodology, while DevOps is a set of practices and cultural principles aimed at breaking down barriers between development and operations teams to accelerate the delivery of high-quality software.

58. Explain user acceptance testing (UAT).

User Acceptance Testing (UAT) is the phase in which end users or representatives of the intended audience evaluate the software to determine whether it meets the specified business requirements and is ready for production deployment. UAT is also known as End-User Testing, and beta testing is one common form of it. The primary goal of UAT is to ensure that the application meets user expectations and functions as intended in real-world scenarios.

59. What are entry and exit criteria?

Entry criteria are the conditions that need to be fulfilled before testing can begin. They ensure that the testing environment is prepared, and the testing team has the necessary information and resources to start testing. Entry criteria may include:

- Requirements Baseline

- Test Plan Approval

- Test Environment Readiness

- Test Data Availability

- Test Case Preparation

- Test Resources

Similarly, exit criteria are the conditions that must be met for testing to be considered complete and for the software to be ready for the next phase or release. These criteria ensure that the software meets the required quality standards before moving forward, and may include:

- Test Case Execution

- Defect Closure

- Test Coverage

- Stability

- Performance Targets

- User Acceptance
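Some exit criteria can be expressed as measurable thresholds and checked automatically. The sketch below covers three of them (test case execution, pass rate, and defect closure); the threshold values and names are assumptions for illustration, and real values come from the approved test plan.

```java
final class ExitCriteria {

    // Illustrative thresholds; real values are defined in the test plan.
    static final double MIN_EXECUTION_PERCENT = 100.0;
    static final double MIN_PASS_PERCENT = 95.0;
    static final int MAX_OPEN_CRITICAL_DEFECTS = 0;

    private ExitCriteria() { }

    // Returns true only if every threshold is satisfied.
    static boolean isMet(int planned, int executed, int passed, int openCriticalDefects) {
        if (planned <= 0 || executed <= 0) {
            return false; // nothing planned or executed: testing cannot be complete
        }
        double executionPercent = 100.0 * executed / planned;
        double passPercent = 100.0 * passed / executed;
        return executionPercent >= MIN_EXECUTION_PERCENT
                && passPercent >= MIN_PASS_PERCENT
                && openCriticalDefects <= MAX_OPEN_CRITICAL_DEFECTS;
    }

    public static void main(String[] args) {
        // 200 planned, 200 executed, 192 passed (96%), no open critical defects.
        System.out.println("Ready for release: " + isMet(200, 200, 192, 0));
        // Same run, but one critical defect is still open.
        System.out.println("Ready for release: " + isMet(200, 200, 192, 1));
    }
}
```

Criteria such as stability or user acceptance usually still need a human sign-off, so a check like this supports the decision and does not replace it.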

60. How to test a pen? Explain software testing techniques in the context of testing a pen.

| Software Testing Techniques | Testing a Pen |
| --- | --- |
| 1. Functional Testing | Verify that the pen writes smoothly, ink flows consistently, and the pen cap securely covers the tip. |
| 2. Boundary Testing | Test the pen's ink level at minimum and maximum to check behavior at the boundaries. |
| 3. Negative Testing | Ensure the pen does not write when no ink is present and behaves correctly when the cap is missing. |
| 4. Stress Testing | Apply excessive pressure while writing to check the pen's durability and ink leakage. |
| 5. Compatibility Testing | Test the pen on various surfaces (paper, glass, plastic) to ensure it writes smoothly on different materials. |
| 6. Performance Testing | Evaluate the pen's writing speed and ink flow to meet performance expectations. |
| 7. Usability Testing | Assess the pen's grip, comfort, and ease of use to ensure it is user-friendly. |
| 8. Reliability Testing | Test the pen under continuous writing to check its reliability during extended usage. |
| 9. Installation Testing | Verify that multi-part pens assemble easily and securely during usage. |
| 10. Exploratory Testing | Creatively test the pen to uncover any potential hidden defects or unique scenarios. |
| 11. Regression Testing | Repeatedly test the pen's core functionalities after any changes, such as ink replacement or design modifications. |
| 12. User Acceptance Testing | Have potential users evaluate the pen's writing quality and other features to ensure it meets their expectations. |
| 13. Security Testing | Ensure the pen cap securely covers the tip, preventing ink leaks or staining. |
| 14. Recovery Testing | Accidentally drop the pen to verify if it remains functional or breaks upon impact. |
| 15. Compliance Testing | If applicable, test the pen against industry standards or regulations. |

Recommended Readings For Your Software Testing Interview

To better prepare for your interviews, here are some topic-specific lists of interview questions:

- QA Interview Questions

- Web API Testing Interview Questions

- Mobile Testing Interview Questions

- DevOps Interview Questions

- Manual To Automation Testing Interview Questions

- 15 Questions to Evaluate Your QA Team's Software Testing Process

- Strategies to Deal A Software QA Engineer's Salary

The questions above mostly cover the theory of the QA industry. Some companies will also give you an interview project that requires you to demonstrate your software testing skills in practice. You can read through our Katalon Blog for up-to-date information on the testing industry, especially automation testing, which can be useful in your QA interview.

As a leading automation testing platform, Katalon offers free Software Testing courses for both beginners and intermediate testers through Katalon Academy, a comprehensive knowledge hub packed with informative resources.
