Software Testing Basics: Types, Process & Examples


What is Software Testing?

Software testing is the process of evaluating a software application to ensure it works as expected and is free of defects.

At its core, it's about checking whether the software does what it’s supposed to do, under the right conditions, and gracefully handles the wrong ones.

The History of Software Testing

- 1940s–50s: Testing was essentially the same as debugging, with programmers manually running their code and fixing errors directly.

- 1960s: Testing began to separate from debugging, with the introduction of defined test cases and checks against software requirements.

- 1970s: Structured testing methods emerged, including white-box and black-box approaches, and different levels of testing such as unit, integration, system, and acceptance were clearly defined.

- 1980s: Formal test design techniques like equivalence partitioning and boundary value analysis became standard, while early automation tools and independent testing teams started to appear.

- 1990s: Test automation expanded significantly with GUI-based tools, regression testing became critical for frequent updates, and ISO standards and the Capability Maturity Model (CMM) encouraged more structured quality processes.

- 2000s: Agile methodologies and test-driven development pushed testing earlier in the development cycle, while open-source tools like JUnit and Selenium made automation widely accessible and continuous testing became a core mindset.

- 2010s: Testing became fully integrated with DevOps and CI/CD pipelines, shift-left approaches brought testers into earlier stages of development, and mobile, cloud, and cross-browser testing became increasingly important.

- 2020s: AI-powered testing tools enabled automated test generation, self-healing scripts, and predictive defect detection. The focus shifted toward continuous quality and stronger security testing, while human exploratory testing remained important.

Benefits of Software Testing

- Detect bugs: the primary goal of software testing is to identify bugs before they impact users. Because modern apps rely on interconnected components, a single issue can trigger a chain reaction, so early detection minimizes the impact.

- Maintain and improve software quality: testing helps ensure the software is stable, secure, and user-friendly, and it highlights areas for improvement and optimization.

- Build trust and satisfaction: consistent testing produces a stable, dependable product that delivers a positive user experience, which translates into user trust and satisfaction.

- Identify vulnerabilities to mitigate risks: in high-stakes industries like finance, healthcare, and law, testing prevents costly errors that could harm users or expose companies to legal risk. It acts as a safety net that keeps critical systems secure and functional.

Users also sometimes use your software in ways you never anticipated. Testing is one of the best ways to catch these "edge cases": rare or unconventional scenarios that reveal hidden issues.

Types of Software Testing

There are two major types of software testing:

- Functional testing checks if software features work as expected.

- Non-functional testing checks if the software's non-functional aspects (e.g., stability, security, and usability) satisfy expectations.
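To make the functional/non-functional distinction concrete, here is a minimal sketch using Python's built-in `unittest` module. The `search_products` function, the catalog, and the 0.5-second response-time budget are all hypothetical: the functional test asks "does it return the right result?", while the non-functional test asks "does it respond fast enough?".

```python
import time
import unittest


def search_products(catalog: list[str], query: str) -> list[str]:
    """Hypothetical feature under test: case-insensitive substring search."""
    needle = query.lower()
    return [item for item in catalog if needle in item.lower()]


# Hypothetical catalog: 100,000 filler items plus one item we expect to find.
CATALOG = [f"Product {i}" for i in range(100_000)] + ["Handmade Mug"]


class FunctionalTest(unittest.TestCase):
    # Functional: does the feature return the correct results?
    def test_finds_matching_item(self) -> None:
        self.assertEqual(search_products(CATALOG, "MUG"), ["Handmade Mug"])


class NonFunctionalTest(unittest.TestCase):
    # Non-functional: does the feature respond fast enough?
    # The 0.5-second budget is an assumed requirement, not a standard.
    def test_search_meets_response_time_budget(self) -> None:
        start = time.perf_counter()
        search_products(CATALOG, "mug")
        elapsed = time.perf_counter() - start
        self.assertLess(elapsed, 0.5)
```

Wall-clock assertions like this can be flaky on slow or shared CI machines, which is why dedicated performance testing usually relies on purpose-built load tools and repeated measurements.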

There are many other testing types:

- Unit testing checks an individual unit in isolation from the rest of the application. A unit is the smallest testable part of any software.

- Integration testing checks the interaction between several individual units. These units usually have already passed unit testing.

- End-to-end testing checks an entire workflow in the software from start to finish.

- System testing checks the entire system, including its functional and non-functional aspects.

- Exploratory testing is where testers explore the software without predefined test scripts, relying on their experience and intuition to find bugs.

- Visual testing checks whether the software's visual appearance matches expectations.

- Regression testing checks whether new code breaks existing features.

- UI testing checks whether the user interface (UI) meets expectations.

- Black-box testing is where testers check the software without knowing its code structure.

- White-box testing is where testers check the software with full knowledge of its code structure.

- Acceptance testing evaluates whether the application meets business requirements in real-life scenarios before it is accepted for release.

- Cross-browser testing checks whether the software works across browsers and environments.

- Performance testing checks how the software performs under load and stress (high user volume or extreme usage).
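The difference between unit and integration testing is easiest to see side by side. This minimal sketch uses Python's built-in `unittest`; `apply_discount` and `Cart` are hypothetical names invented for illustration. The unit tests exercise one function in isolation, while the integration test checks that two units work correctly together.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical unit: return the price after a percentage discount."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class Cart:
    """Hypothetical component that depends on apply_discount."""

    def __init__(self) -> None:
        self.prices: list[float] = []

    def add(self, price: float) -> None:
        self.prices.append(price)

    def total(self, discount_percent: float = 0) -> float:
        # Integration point: Cart relies on apply_discount for the final figure.
        return apply_discount(sum(self.prices), discount_percent)


class UnitTests(unittest.TestCase):
    # Unit tests: exercise apply_discount on its own.
    def test_discount_is_applied(self) -> None:
        self.assertEqual(apply_discount(100.0, 20), 80.0)

    def test_invalid_percent_is_rejected(self) -> None:
        with self.assertRaises(ValueError):
            apply_discount(100.0, 150)


class IntegrationTests(unittest.TestCase):
    # Integration test: exercises Cart and apply_discount together.
    def test_cart_total_uses_discount(self) -> None:
        cart = Cart()
        cart.add(30.0)
        cart.add(20.0)
        self.assertEqual(cart.total(discount_percent=10), 45.0)
```

Saved to a file, these tests can be run with `python -m unittest path/to/file.py`.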

📚Read more: Different types of software testing you should know

Approach to Software Testing

Testers have two approaches to software testing: manual testing and automation testing. Each approach has its own advantages and disadvantages, which teams must weigh to make the best use of their resources.

- Manual Testing: Testers interact with the software step by step, exactly as a real user would, and observe whether any issues arise. Anyone can start manual testing simply by assuming the role of a user. However, manual testing is time-consuming because humans cannot execute tasks as quickly as machines, which is why automation testing is used to speed up the process.

- Automation Testing: Instead of interacting with the system manually, testers use tools or write automation scripts that interact with the software on their behalf. The tester simply clicks “Run” and the script executes the rest of the testing process automatically.
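As a rough illustration of what "the script executes the rest" means, the sketch below automates a check that a manual tester would otherwise repeat by hand for every input. It uses `unittest`'s `subTest` to run one test over a table of inputs. The `is_valid_username` rule (3 to 16 letters, digits, or underscores) is a hypothetical example, not taken from any real application.

```python
import re
import unittest

# Hypothetical rule under test: 3-16 characters, letters, digits, or underscores.
USERNAME_PATTERN = re.compile(r"[A-Za-z0-9_]{3,16}")


def is_valid_username(name: str) -> bool:
    return USERNAME_PATTERN.fullmatch(name) is not None


# Each row replaces one manual check: (input, expected result).
CASES = [
    ("alice", True),
    ("bob_42", True),
    ("ab", False),          # too short
    ("a" * 17, False),      # too long
    ("has space", False),   # illegal character
    ("", False),            # empty input
]


class UsernameValidationTest(unittest.TestCase):
    def test_username_rules(self) -> None:
        for name, expected in CASES:
            # subTest reports each failing row separately instead of stopping at the first.
            with self.subTest(name=name):
                self.assertEqual(is_valid_username(name), expected)
```

Adding a new check means adding one row to `CASES`; the script reruns every row in milliseconds, which is where automation pays off over repeating the same steps by hand.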

Important Concepts in Software Testing

- Test Case – A set of conditions, inputs, and expected outputs designed to test a specific software function.

- Traceability Matrix – A document linking test cases to requirements to ensure full coverage.

- Test Script – A manual or automated step-by-step procedure for executing test cases.

- Test Suite – A collection of test cases designed to evaluate multiple aspects of a software system.

- Test Fixture (Test Data) – Predefined data and conditions used to maintain consistency during testing.

- Test Harness – A combination of software and test data used to test components in an isolated environment.

- Bug/Defect Life Cycle – The process a defect follows from discovery to resolution.

- Severity vs. Priority – Severity indicates the impact of a bug; priority indicates how urgently it should be fixed.

- Test Environment – The hardware, software, databases, and network setup required to execute tests.

- Test Plan vs. Test Strategy – The test plan details test execution, while the test strategy outlines the higher-level testing approach.

- Test Scenario – A high-level description of a real-world use case that needs to be tested.

- Test Data Management – The practice of creating, maintaining, and managing test data sets.

- Mocking and Stubbing – Techniques for simulating dependencies (APIs, databases, services) during isolated testing.

- Code Coverage – A metric that measures how much of the application’s code has been executed during tests.

- Smoke Testing vs. Sanity Testing – Smoke tests validate basic functionality; sanity tests verify targeted updates or fixes.

- Edge Cases and Boundary Testing – Testing extreme input values and system limits.

- Defect Reporting – The structured process of documenting and tracking software defects.

- Test Automation Frameworks – Structured guidelines for automated testing (e.g., data-driven, keyword-driven, hybrid).

- Continuous Testing in CI/CD – Integrating automated tests into the development pipeline to detect defects early.
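Several of these concepts map directly onto features of Python's standard `unittest` framework. The sketch below shows a test fixture (`setUp`), a stub and mock created with `unittest.mock.Mock` to stand in for an external payment API, and boundary tests around an amount limit. `CheckoutService`, its 1 to 10,000 limit, and the gateway's `charge` method are hypothetical names invented for this example.

```python
import unittest
from unittest.mock import Mock

MIN_AMOUNT = 1        # hypothetical lower limit (inclusive)
MAX_AMOUNT = 10_000   # hypothetical upper limit (inclusive)


class CheckoutService:
    """Hypothetical service that charges a customer through a payment gateway."""

    def __init__(self, gateway) -> None:
        self.gateway = gateway

    def pay(self, amount: int) -> str:
        if not MIN_AMOUNT <= amount <= MAX_AMOUNT:
            raise ValueError("amount out of range")
        # In production this would call a real payment API.
        return self.gateway.charge(amount)


class CheckoutServiceTest(unittest.TestCase):
    def setUp(self) -> None:
        # Test fixture: a fresh gateway double and service before every test.
        self.gateway = Mock()
        # Stubbing: the fake gateway always returns a canned transaction ID.
        self.gateway.charge.return_value = "txn-123"
        self.service = CheckoutService(self.gateway)

    def test_successful_payment_calls_gateway_once(self) -> None:
        self.assertEqual(self.service.pay(50), "txn-123")
        # Mocking: verify how the dependency was called.
        self.gateway.charge.assert_called_once_with(50)

    def test_boundary_values_are_accepted(self) -> None:
        # Values exactly on the limits should be accepted.
        for amount in (MIN_AMOUNT, MAX_AMOUNT):
            with self.subTest(amount=amount):
                self.assertEqual(self.service.pay(amount), "txn-123")

    def test_values_just_outside_boundaries_are_rejected(self) -> None:
        # Values one step beyond each limit should fail without charging anyone.
        for amount in (MIN_AMOUNT - 1, MAX_AMOUNT + 1):
            with self.subTest(amount=amount):
                with self.assertRaises(ValueError):
                    self.service.pay(amount)
        self.gateway.charge.assert_not_called()
```

Because `setUp` runs before each test, every test starts from the same known state, which is exactly the consistency a fixture is meant to provide.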

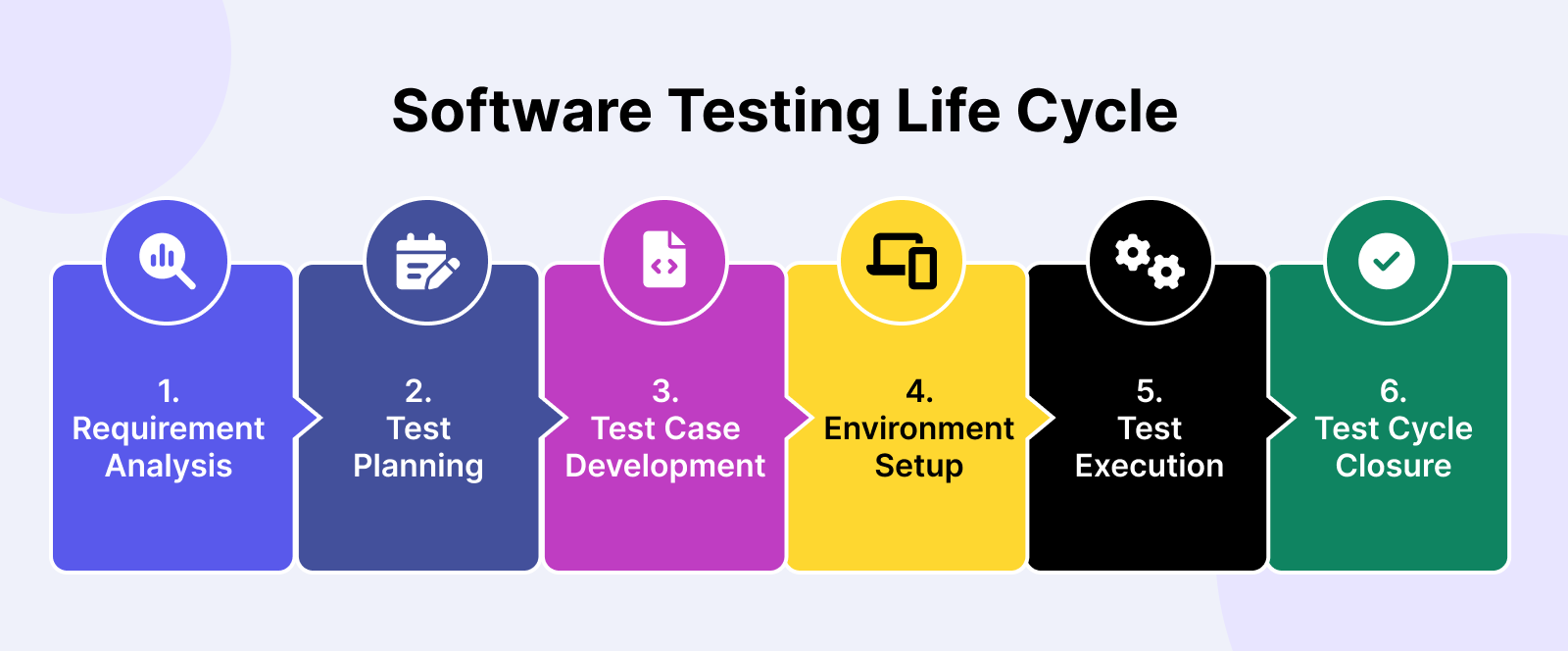

Software Testing Life Cycle

Many software testing initiatives follow a process known as the Software Testing Life Cycle (STLC). The STLC consists of six key activities that help ensure all software quality goals are met, as shown below:

1. Requirement Analysis

In this stage, software testers work with stakeholders to identify and understand test requirements. Insights from these discussions are consolidated into the Requirement Traceability Matrix (RTM), which forms the foundation for building the test strategy.

Three main roles (often called the "Three Amigos") are involved in this process:

- Product Owner: Represents the business side and defines the problem to be solved.

- Developer: Builds a solution that addresses the Product Owner’s problem.

- Tester: Ensures the solution works as intended and identifies potential issues.
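The Requirement Traceability Matrix produced in this stage can be as simple as a mapping from each requirement to the test cases that cover it. The sketch below uses hypothetical requirement IDs (REQ-1 to REQ-3) and test case IDs to show how such a matrix reveals requirements that no test covers yet.

```python
# Hypothetical requirements gathered during requirement analysis.
REQUIREMENTS = {
    "REQ-1": "User can log in with valid credentials",
    "REQ-2": "User sees an error for an invalid password",
    "REQ-3": "User can reset a forgotten password",
}

# Traceability matrix: each requirement ID maps to the test cases that cover it.
TRACEABILITY = {
    "REQ-1": ["TC001"],
    "REQ-2": ["TC002", "TC003"],
    "REQ-3": [],
}


def uncovered_requirements(requirements: dict[str, str],
                           matrix: dict[str, list[str]]) -> list[str]:
    """Return requirement IDs that no test case traces back to."""
    return [req_id for req_id in requirements if not matrix.get(req_id)]


def coverage_percent(requirements: dict[str, str],
                     matrix: dict[str, list[str]]) -> float:
    """Percentage of requirements covered by at least one test case."""
    uncovered = len(uncovered_requirements(requirements, matrix))
    return 100 * (len(requirements) - uncovered) / len(requirements)


if __name__ == "__main__":
    print(uncovered_requirements(REQUIREMENTS, TRACEABILITY))       # ['REQ-3']
    print(f"{coverage_percent(REQUIREMENTS, TRACEABILITY):.1f}%")   # 66.7%
```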

2. Test Planning

After thorough analysis, a test plan is created. Test planning involves aligning with relevant stakeholders on the test strategy:

- Test objectives: Define attributes like functionality, usability, security, performance, and compatibility.

- Output and deliverables: Document the test scenarios, test cases, and test data to be produced and monitored.

- Test scope: Determine which areas and functionalities of the application will be tested (in-scope) and which ones won't (out-of-scope).

- Resources: Estimate the costs for test engineers, manual/automated testing tools, environments, and test data.

- Timeline: Establish expected milestones for test-specific activities along with development and deployment.

- Test approach: Assess the testing techniques (white box/black box testing), test levels (unit, integration, and end-to-end testing), and test types (regression, sanity testing) to be used.

3. Test Case Development

After defining the scenarios and functionalities to be tested, we start writing the test cases.

Here's what a basic test case looks like:

| Component | Details |
|---|---|
| Test Case ID | TC001 |
| Description | Verify login with valid credentials |
| Preconditions | User is on the Etsy login popup |
| Test Steps | 1. Enter a valid email address. 2. Enter the corresponding valid password. 3. Click the "Sign In" button. |
| Test Data | Email: validuser@example.com; Password: validpassword123 |
| Expected Result | The user is successfully logged in and redirected to the homepage or the previously intended page. |
| Actual Result | (To be filled in after execution) |
| Postconditions | User is logged in and the session is active |
| Pass/Fail Criteria | Pass: the user is logged in and redirected correctly. Fail: an error message is displayed or the user is not logged in. |
| Comments | Ensure the test environment has network access and the server is operational. |

This is a test case to check Etsy's login.

When writing test cases, make sure each one clearly shows what is being tested, what the expected outcome is, and how to troubleshoot if bugs appear.
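For comparison, TC001 could be automated roughly as follows. Driving the real Etsy page would require a browser automation tool, so this sketch instead tests a hypothetical in-memory `AuthService` that stands in for the login back end; the class, its `sign_in` method, and the `"/"` redirect value are assumptions for illustration only. Each part of the test maps to a row of the table: `setUp` establishes the precondition, the test body performs the steps with the test data, and the assertions encode the expected result and postcondition.

```python
import unittest
from dataclasses import dataclass
from typing import Optional


@dataclass
class LoginResult:
    success: bool
    redirect_to: Optional[str] = None
    error: Optional[str] = None


class AuthService:
    """Hypothetical stand-in for a login back end (not Etsy's real API)."""

    def __init__(self, users: dict) -> None:
        self._users = users           # email -> password
        self.active_sessions = set()  # emails with an active session

    def sign_in(self, email: str, password: str, next_page: str = "/") -> LoginResult:
        if self._users.get(email) != password:
            return LoginResult(success=False, error="Invalid email or password")
        self.active_sessions.add(email)
        return LoginResult(success=True, redirect_to=next_page)


class TestLogin(unittest.TestCase):
    def setUp(self) -> None:
        # Precondition: a registered user exists and the login form is available.
        self.auth = AuthService({"validuser@example.com": "validpassword123"})

    def test_tc001_login_with_valid_credentials(self) -> None:
        # Test steps using TC001's test data.
        result = self.auth.sign_in("validuser@example.com", "validpassword123")
        # Expected result: logged in and redirected to the homepage.
        self.assertTrue(result.success)
        self.assertEqual(result.redirect_to, "/")
        self.assertIsNone(result.error)
        # Postcondition: the session is active.
        self.assertIn("validuser@example.com", self.auth.active_sessions)

    def test_wrong_password_shows_error(self) -> None:
        # Companion negative check (not part of TC001): error shown, no session.
        result = self.auth.sign_in("validuser@example.com", "wrong-password")
        self.assertFalse(result.success)
        self.assertIsNotNone(result.error)
        self.assertNotIn("validuser@example.com", self.auth.active_sessions)
```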

Next comes test case management, which involves tracking and organizing your test cases. You can manage them in spreadsheets or in test management tools like Xray, and automate them with tools such as Selenium, Cypress, or Katalon for faster execution.

4. Test Environment Setup

Setting up the test environment involves preparing the software and hardware needed to test an app, like servers, browsers, networks, and devices.

For a mobile app, you’ll need:

- Development environment for early testing:
  - Tools like Xcode (iOS) or Android Studio (Android)
  - Simulators/emulators for virtual testing
  - Local databases and mock APIs
  - CI tools to run automated tests

- Physical devices to catch real-world issues:
  - Different models (e.g., iPhone, Galaxy)
  - Various OS versions (e.g., iOS 14, Android 11)
  - Tools like Appium for automated testing

- Emulation environment for quick tests without physical devices:
  - Android emulators and iOS simulators
  - Various screen resolutions, RAM, and CPU configurations
  - Debug tools in Xcode or Android Studio
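Covering several device models and OS versions quickly multiplies into a test matrix. This sketch enumerates every combination with `itertools.product` and drops pairings that cannot exist (an iPhone running Android, for example). The device names, OS versions, and the "OS family" mapping are hypothetical placeholders, not a recommended device list.

```python
from itertools import product

# Hypothetical devices, each mapped to its OS family.
DEVICES = {
    "iPhone 12": "iOS",
    "iPhone SE": "iOS",
    "Galaxy S21": "Android",
}
OS_VERSIONS = ["iOS 14", "iOS 15", "Android 11", "Android 12"]


def build_matrix(devices: dict[str, str], os_versions: list[str]) -> list[tuple[str, str]]:
    """Return every (device, OS version) pair whose OS family matches the device."""
    return [
        (device, version)
        for device, version in product(devices, os_versions)
        # Only pair iOS devices with iOS versions, Android devices with Android.
        if version.startswith(devices[device])
    ]


if __name__ == "__main__":
    for device, version in build_matrix(DEVICES, OS_VERSIONS):
        print(f"{device:<12} {version}")
```

In practice, teams often trim a full matrix like this down to the combinations their users actually have, since every extra pairing multiplies execution time.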

5. Test Execution

With clear objectives in mind, the QA team writes test cases and test scripts and prepares the necessary test data for execution.

Tests can be executed manually or automatically. After execution, any defects found are tracked and reported to the development team, who then resolve them.

During execution, the test case goes through the following stages:

- Untested: The test case has not been executed yet at this stage.

- Blocked/On hold: This status applies to test cases that can’t be executed due to dependencies like unresolved defects, unavailable test data, system downtime, or incomplete components.

- Failed: This status indicates that the actual outcome didn’t match the expected outcome. In other words, the test conditions weren’t met, prompting the team to investigate and find the root cause.

- Passed: The test case was executed successfully, with the actual outcome matching the expected result. A high pass rate is generally a good sign of software quality, as long as the tests themselves are meaningful.

- Skipped: A test case may be skipped if it’s not relevant to the current testing scenario. The reason for skipping is usually documented for future reference.

- Deprecated: This status is for test cases that are no longer valid due to changes or updates in the application. The test case can be removed or archived.
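Test management tools track these statuses for you, but the idea is easy to sketch. Below, the statuses from the list become a Python `Enum`, and a small helper tallies one run. The test case IDs and their results are made up for illustration.

```python
from collections import Counter
from enum import Enum


class ExecutionStatus(Enum):
    UNTESTED = "untested"
    BLOCKED = "blocked"
    FAILED = "failed"
    PASSED = "passed"
    SKIPPED = "skipped"
    DEPRECATED = "deprecated"


def summarize(results: dict[str, ExecutionStatus]) -> dict[str, int]:
    """Count how many test cases ended up in each status."""
    counts = Counter(results.values())
    # Include zero counts so every status appears in the summary.
    return {status.value: counts.get(status, 0) for status in ExecutionStatus}


# Hypothetical results of one test run.
run = {
    "TC001": ExecutionStatus.PASSED,
    "TC002": ExecutionStatus.FAILED,
    "TC003": ExecutionStatus.BLOCKED,
    "TC004": ExecutionStatus.PASSED,
    "TC005": ExecutionStatus.SKIPPED,
}

if __name__ == "__main__":
    print(summarize(run))
    # {'untested': 0, 'blocked': 1, 'failed': 1, 'passed': 2, 'skipped': 1, 'deprecated': 0}
```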

📚 Read More: A Guide To Understand Test Execution

6. Test Cycle Closure

Finally, you need a test report that documents what happened during the testing process. A test report usually includes four main elements:

- Visualizations: Charts, graphs, and diagrams to show testing trends and patterns.

- Performance: Track performance trends like execution times and success rates.

- Comparative analysis: Compare results across different software versions to identify improvements or regressions.

- Recommendations: Provide actionable insights on which areas need debugging attention.

Software testers then meet to analyze the report, evaluate how effective the testing was, and document key takeaways for future reference.
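The performance and comparative-analysis elements of a report mostly come down to simple arithmetic over per-version results. This sketch assumes hypothetical pass/fail counts and total durations for two releases (`v1.4` and `v1.5`) and computes the pass rate, the average execution time per test, and the trend between versions.

```python
from dataclasses import dataclass


@dataclass
class RunSummary:
    version: str
    passed: int
    failed: int
    total_seconds: float

    @property
    def executed(self) -> int:
        return self.passed + self.failed

    @property
    def pass_rate(self) -> float:
        """Percentage of executed tests that passed."""
        return 100 * self.passed / self.executed if self.executed else 0.0

    @property
    def avg_seconds(self) -> float:
        """Average execution time per executed test."""
        return self.total_seconds / self.executed if self.executed else 0.0


def compare(old: RunSummary, new: RunSummary) -> str:
    delta = new.pass_rate - old.pass_rate
    trend = "improvement" if delta > 0 else "regression" if delta < 0 else "no change"
    return (f"{old.version} -> {new.version}: pass rate "
            f"{old.pass_rate:.1f}% -> {new.pass_rate:.1f}% ({trend}), "
            f"avg time {old.avg_seconds:.2f}s -> {new.avg_seconds:.2f}s")


# Hypothetical numbers for two releases.
v1 = RunSummary("v1.4", passed=180, failed=20, total_seconds=600.0)
v2 = RunSummary("v1.5", passed=192, failed=8, total_seconds=560.0)

if __name__ == "__main__":
    print(compare(v1, v2))
    # v1.4 -> v1.5: pass rate 90.0% -> 96.0% (improvement), avg time 3.00s -> 2.80s
```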

Popular Software Testing Models

Testing models have evolved in parallel with software development methodologies.

1. V-model

In the past, QA teams had to wait until the final development stage to start testing. Test quality was usually poor, and developers could not troubleshoot in time for product release.

The V-model solves that problem by engaging testers in every phase of development: each development phase is paired with a corresponding testing phase. This model fits naturally with the Waterfall development model, which has since been largely replaced by Agile approaches.

On one side, there is “Verification”. On the other side, there is “Validation”.

- Verification is about “Are we building the product right?”

- Validation is about “Are we building the right product?”

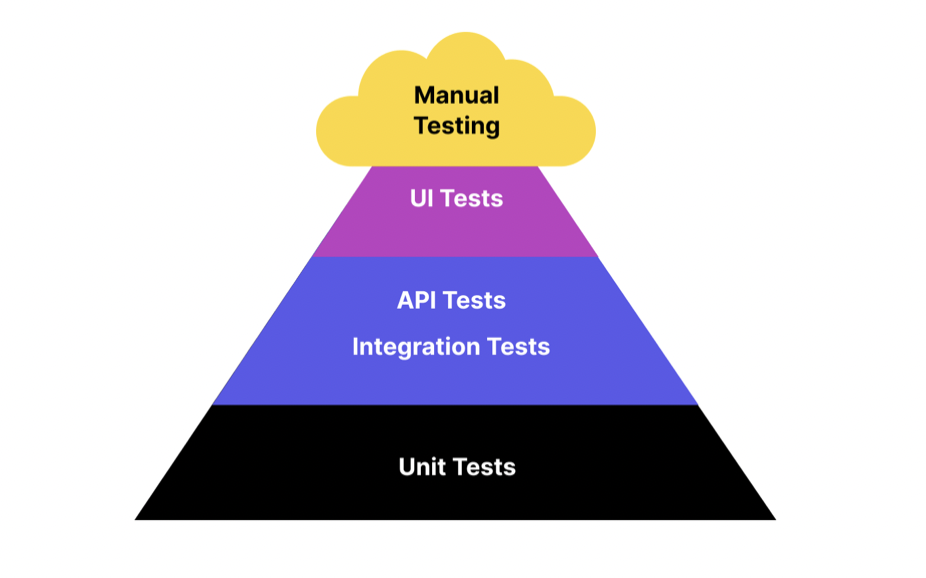

2. Test Pyramid model

As technology advanced, the Waterfall model gradually gave way to the now widely used Agile methodologies. Consequently, the V-model evolved into the Test Pyramid model, which visually represents a three-layer testing strategy.

Most of the tests are unit tests, which validate individual components. Next, testers group those components and test them as a unified entity to see how they interact. Automation testing can be leveraged at these two stages for optimal efficiency. At the top of the pyramid sits a smaller number of end-to-end (UI) tests that validate complete user workflows.

📚 Read More: Test Pyramid: A Guide To Implement in Practice

3. The Honeycomb Model

The Honeycomb model is a modern approach to software testing in which integration testing is the primary focus, while unit testing (implementation details) and UI testing (integrated tests) receive less attention. This model reflects the API-focused system architectures that have become common as organizations move toward cloud infrastructure.

Manual Testing vs. Automated Software Testing: Which One to Choose?

| Aspect | Manual Testing | Automation Testing |
|---|---|---|
| Definition | Testing conducted manually by a human without the use of scripts or tools. | Testing conducted using automated tools and scripts to execute test cases. |
| Execution Speed | Slower, as it relies on human effort. | Faster, as tests are executed by automated tools. |
| Initial Investment | Low, as it primarily requires human resources. | High, due to the cost of tools and the time required to write scripts. |
| Accuracy | Prone to human error, especially in repetitive tasks. | More accurate, as it eliminates human error in repetitive tasks. |
| Test Coverage | Limited by human ability to perform extensive and repetitive tests. | Extensive, as automated tests can run repeatedly with large data sets. |
| Usability Testing | Effective, relying on human judgment and feedback. | Ineffective, as tools cannot judge user experience and intuitiveness. |
| Exploratory Testing | Highly effective, as humans can explore the application creatively. | Ineffective, as it requires human intuition and exploratory skills. |
| Regression Testing | Time-consuming and labor-intensive. | Highly efficient, as tests can be rerun automatically with each code change. |
| Maintenance | Lower, but can become tedious with frequent changes. | Requires significant maintenance to update scripts with application changes. |
| Initial Setup Time | Minimal, as it does not require scripting or tool setup. | High, due to the need to develop test scripts and set up tools. |
| Skill Requirement | Requires knowledge of the application and testing principles. | Requires programming skills and knowledge of automation tools. |
| Cost Efficiency | More cost-effective for small-scale or short-term projects. | More cost-effective for large-scale or long-term projects with repetitive tests. |
| Reusability of Tests | Limited, as manual tests must be executed by hand every time. | High, as automated tests can be rerun across builds, releases, and environments. |

📚 Read More: Automated Testing vs Manual Testing: A Detailed Comparison

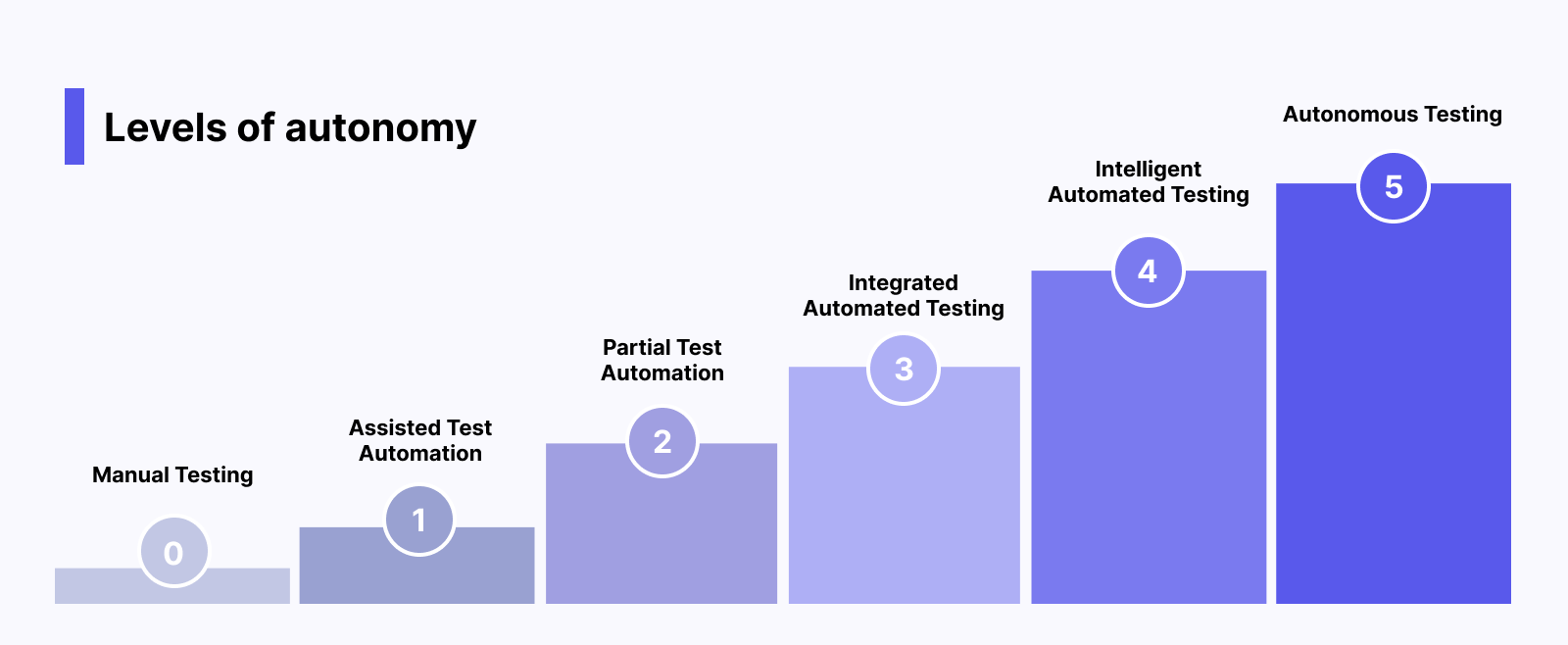

Is Automated Testing Making Manual Testing Obsolete?

Automated testing takes software testing to the next level, enabling QA teams to test faster and more efficiently. So is it making manual testing a thing of the past?

The short-term answer is “No”.

The long-term answer is “Maybe”.

Manual testing is still needed because only humans can evaluate the application’s UX and supervise automation testing.

However, AI technology is gradually changing the landscape. Smart testing features have been added to many automated software testing tools to drastically reduce the need for human intervention.

Some expect the industry to eventually reach autonomous testing, where machines take full control of all testing activities. Many software testing tools already use LLMs to move closer to that goal.

Top Software Testing Tools with Best Features

1. Katalon

Katalon allows QA teams to author automated UI and API tests for web, mobile, and desktop apps, execute those tests on preconfigured cloud environments, and maintain them, all in one unified platform without any additional third-party tools. The Katalon Platform is among the best commercial automation tools for functional software testing on the market.

- Test Planning: Ensure alignment between requirements and testing strategy. Maintain focus on quality by connecting TestOps to project requirements, business logic, and release planning. Optimize test coverage and execute tests efficiently using dynamic test suites and smart scheduling.

- Test Authoring: Katalon Studio combines low-code simplicity with full-code flexibility, so anyone can create automated test scripts and then customize them as needed. It automatically captures test objects, properties, and locators for reuse.

- Test Organization: TestOps organizes all your test artifacts in one place (test cases, test suites, environments, objects, and profiles) for a holistic view. Seamlessly map automated tests to existing manual tests through one-click integrations with tools like Jira and Xray.

- Test Execution: Instant web and mobile test environments. TestCloud provides on-demand environments for running tests in parallel across browsers, devices, and operating systems, while handling the heavy lifting of setup and maintenance. The Runtime Engine streamlines execution in your own environment with smart wait, self-healing, scheduling, and parallel execution.

- Test Reporting: Real-time visibility and actionable insights. Quickly identify failures with auto-detected assertions and dive deeper with comprehensive execution views. Gain broader insights with coverage, release, flakiness, and pass/fail trend reports. Receive real-time notifications and leverage the 360-degree visibility in TestOps for faster, clearer, and more confident decision-making.



2. Selenium

Selenium is a versatile open-source automation testing library for web applications. It is popular among developers due to its compatibility with major browsers (Chrome, Safari, Firefox) and operating systems (macOS, Windows, Linux).


Selenium simplifies testing by reducing manual effort, and its record-and-playback IDE offers an approachable way to create automated tests. Testers can write tests in programming languages such as Java, C#, Ruby, Python, and JavaScript to interact with the web application. Key features of Selenium include:

- Selenium Grid: A distributed test execution platform that enables parallel execution on multiple machines, saving time.

- Selenium IDE: An open-source record-and-playback tool for creating and debugging test cases. It can export recorded tests as code for several languages and test frameworks (e.g., Java with JUnit, C# with NUnit, Python with pytest).

- Selenium WebDriver: A component of the Selenium suite used to control web browsers, allowing simulation of user actions like clicking links and entering data.

Website: Selenium

GitHub: SeleniumHQ

3. Appium

Appium is an open-source automation testing tool designed for mobile applications. It enables users to create automated UI tests for native, mobile web, and hybrid apps on Android and iOS using the W3C WebDriver protocol (older versions relied on the Mobile JSON Wire Protocol). Key features include:

- Supported programming languages: Java, C#, Python, JavaScript, Ruby, PHP, Perl

- Cross-platform testing with reusable test scripts and consistent APIs

- Execution on real devices, simulators, and emulators

- Integration with other testing frameworks and CI/CD tools

Appium simplifies mobile app testing by providing a comprehensive solution for automating UI tests across different platforms and devices.

Website: Appium Documentation

Conclusion

Ultimately, the goal of software testing is to deliver applications that meet or exceed user expectations. A comprehensive testing strategy combines the best of manual and automation testing.

FAQs on Software Testing

1. What is software testing?

Software testing is the process of evaluating a software application to verify it meets user expectations and works as intended. It involves executing the software under controlled conditions, across different scenarios and environments, to detect defects before release.

2. What are the benefits of software testing for quality and user trust?

Benefits include detecting bugs before users are affected and improving software stability, usability, and security. Testing also builds user trust and satisfaction, especially in high-risk industries (e.g., finance, healthcare) where quality is critical.

3. What types of software testing exist?

The article identifies two main categories:

- Functional testing to verify features work as expected

- Non-functional testing covering performance, usability, security, etc.

It also references specific types like unit testing (isolating the smallest components) and performance testing (stress/load).

4. How do manual and automated testing compare?

Manual testing involves human testers stepping through the application as real users would, which is realistic but time-intensive. Automated testing uses scripts and tools to execute tests faster and more consistently, making it ideal for regression and repetitive scenarios.

5. What does the Software Testing Life Cycle (STLC) involve?

The STLC follows a structured, step-by-step process with six phases: requirement analysis, test planning, test case development, test environment setup, test execution, and test cycle closure. Each phase helps ensure the software aligns with business requirements and maintains high quality.

6. Does QA require coding?

Manual testing doesn’t require coding. Automation testing often does, since testers write scripts to automate tests, although low-code tools reduce how much coding is needed. Either way, coding is becoming a valuable skill for QA professionals.
