What is a Test Strategy? Guide (With Sample)

A test strategy defines the overall approach and key principles for software testing. It provides a structured framework to guide the testing team in conducting tests efficiently and effectively. In simpler terms, a test strategy helps you know what to test, how to test it, and, most importantly, why you are testing it in the first place.

In this article, we’ll walk you through everything you need to know to build a robust test strategy.

What is a Test Strategy?

A test strategy is a high-level document that outlines the overall approach and guiding principles for software testing. It provides a structured framework that directs the testing team on how to efficiently and effectively conduct tests, ensuring that all key aspects of the software are validated.

Benefits of a Test Strategy

- Provides clear direction and focus for testing activities.

- Identifies and mitigates critical risks early.

- Streamlines processes, optimizing resource use and timelines.

- Promotes adherence to industry and regulatory standards.

- Enhances teamwork by aligning all members with project goals.

- Aids in effective allocation of resources.

- Facilitates efficient monitoring and reporting of test progress.

- Ensures comprehensive validation of all critical functionalities.

Types of Test Strategies

We identify 3 major types of test strategies:

1. Static vs. Dynamic Test Strategy

- Static testing reviews code and documents without execution, catching issues early.

- Dynamic testing runs the software to check its behavior in real scenarios.

Benefits:

- Static testing helps catch defects before they become costly.

- Dynamic testing ensures the software works as expected in real use cases.

2. Preventive vs. Reactive Test Strategy

This strategy balances preventing defects before they occur and reacting to unexpected issues after deployment.

Benefits:

- Preventive testing addresses known risks early, reducing costs.

- Reactive testing handles unforeseen issues, especially during integration or real-world use.

Since not all problems can be predicted, a mix of both strategies is essential to catch hidden or emerging defects.

3. Hybrid Test Strategy

Here we want to strike a balance between manual testing and automation testing.

Why balance them? Manual testing is awesome as a starting point. A common best practice is to run exploratory testing sessions to find bugs. After that, the team performs an automation feasibility assessment to decide whether a specific test scenario is worth automating. If it is, the team can use automation test scripts or testing tools to automate it for future executions.
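To make the feasibility decision repeatable, some teams turn it into a simple rule. The Python sketch below is one hypothetical version: it recommends automating a scenario only if the feature is already stable and the manual time saved over a planning horizon exceeds the estimated scripting effort. The `Scenario` fields, the six-month horizon, and the decision rule are illustrative assumptions, not a standard formula.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    """Hypothetical inputs for one test scenario's feasibility check."""
    name: str
    runs_per_month: int      # how often the scenario is executed
    manual_minutes: float    # time for one manual run
    scripting_hours: float   # estimated effort to automate it
    is_stable: bool          # has the feature stopped changing frequently?


def worth_automating(s: Scenario, horizon_months: int = 6) -> bool:
    """Assumed rule: automate stable scenarios whose manual time saved over
    the horizon exceeds the one-off scripting effort."""
    if not s.is_stable:
        # Frequently changing features tend to break scripts; keep them manual for now.
        return False
    hours_saved = s.runs_per_month * horizon_months * s.manual_minutes / 60
    return hours_saved > s.scripting_hours


login = Scenario("login", runs_per_month=40, manual_minutes=15, scripting_hours=8, is_stable=True)
new_checkout = Scenario("new checkout", runs_per_month=40, manual_minutes=20, scripting_hours=10, is_stable=False)
yearly_report = Scenario("yearly report", runs_per_month=1, manual_minutes=10, scripting_hours=16, is_stable=True)

for s in (login, new_checkout, yearly_report):
    print(s.name, worth_automating(s))
```

This rule deliberately ignores ongoing script maintenance; a real assessment would usually factor that cost in as well.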

📚 Read More: How to do automation testing?

What To Include in a Test Strategy Document

1. Test levels

Start your test strategy with the concept of a test pyramid, which consists of 3 levels:

- Unit tests (Base) – Test individual components in isolation. Fast, automated, and the bulk of the suite (roughly 70-80% of all tests).

- Integration tests (Middle) – Verify data flow between components. Moderate in speed and number (roughly 15-20% of all tests).

- E2E tests (Top) – Validate the full system. Slow and complex, but crucial for key workflows (roughly 5-10% of all tests).

A balanced strategy prioritizes unit tests for efficiency, with fewer integration and E2E tests to maintain stability without slowing development.
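To see whether an existing suite actually has this shape, you can compare each level's share of the total against the ranges above. The Python sketch below does that; the test counts are hypothetical, and the ranges are guidelines rather than hard rules.

```python
from __future__ import annotations

# Target share of the total suite per level, taken from the ranges above.
PYRAMID_TARGETS = {
    "unit": (0.70, 0.80),
    "integration": (0.15, 0.20),
    "e2e": (0.05, 0.10),
}


def pyramid_report(counts: dict[str, int]) -> dict[str, tuple[float, bool]]:
    """Return each level's share of the suite and whether it falls in its target range."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("the suite contains no tests")
    report = {}
    for level, (low, high) in PYRAMID_TARGETS.items():
        share = counts.get(level, 0) / total
        report[level] = (round(share, 3), low <= share <= high)
    return report


# Hypothetical suites: a healthy pyramid and an "ice cream cone" heavy on E2E tests.
print(pyramid_report({"unit": 750, "integration": 180, "e2e": 70}))
print(pyramid_report({"unit": 300, "integration": 190, "e2e": 510}))
```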

2. Objectives and scope

- Set Objectives – Determine what to validate: functional (features) or non-functional (security, performance, usability). Align with project requirements.

- Define Scope – Outline what will and won’t be tested to avoid scope creep. Include:

  - Features to test

  - Features to exclude

  - Testing types

  - Test environment

Prioritize Critical Areas:

- High-risk and frequently used features

- User flows that drive engagement

- Third-party dependencies (APIs, payments)

- Recent changes prone to defects

3. Testing types

There are so many types of testing to choose from, each serving a different purpose. Testing types can be divided into the following groups:

- By Application Under Test (AUT): Grouping tests according to the type of software being evaluated, such as web, mobile, or desktop applications.

- By Application Layer: Organizing tests based on layers in traditional three-tier software architecture, including the user interface (UI), backend, or APIs.

- By Attribute: Categorizing tests based on the specific features or attributes being evaluated, such as visual, functional, or performance testing.

- By Approach: Classifying tests by the overall testing method, whether manual, automated, or driven by AI.

- By Granularity: Grouping tests by the scope and level of detail, like unit testing or end-to-end testing.

- By Testing Techniques: Organizing tests based on the methods used for designing and executing tests. This is more specific than general approaches and includes techniques like black-box, white-box, and gray-box testing.

📚 Read More: 15 Types of QA Testing You Should Know

4. Test approach

Agile is the go-to approach for most QA teams today. Instead of treating testing as a separate phase, it is integrated throughout the development process. Testing occurs continuously at each step, enabling testers to work closely with developers to ensure fast, frequent, and high-quality delivery.

Agile enables shift-left testing, where you essentially “push” testing into earlier stages of development and interweave it with development.

📚 Read More: What is Shift Left Testing? How To Shift Left Your Test Strategy?

5. Test Criteria

The criteria act as checkpoints to ensure the product is both stable and testable before major testing efforts, and that it is ready to move forward after testing is complete.

Entry Criteria (Required system conditions before testing starts):

- Code complete, only bug fixes allowed

- Unit & integration tests passed

- Core functionality (login, navigation, etc.) works

- Test environment set up

- Known bugs documented

- Test data prepared

Exit Criteria (Required system conditions before testing ends):

- All test cases executed

- Critical issues fixed, defects within limits

- Key workflows validated

- Test reports reviewed

- Stable, deployable build
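Measurable exit criteria can be checked automatically, for example as a gate in a CI pipeline. The Python sketch below is a hypothetical version that reports which criteria are still unmet. The `RunSummary` fields are assumptions, and the default limits of 5 major and 10 minor open bugs mirror the sample strategy later in this article. Criteria that need human judgment, such as reviewing test reports, are left out.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class RunSummary:
    """Hypothetical snapshot of a test cycle, e.g. exported from a test management tool."""
    planned: int
    executed: int
    open_critical: int
    open_major: int
    open_minor: int
    key_workflows_passed: bool
    build_deployable: bool


def unmet_exit_criteria(s: RunSummary, max_major: int = 5, max_minor: int = 10) -> list[str]:
    """Return the exit criteria that are not yet met; an empty list means testing can end."""
    unmet = []
    if s.executed < s.planned:
        unmet.append(f"only {s.executed}/{s.planned} test cases executed")
    if s.open_critical > 0:
        unmet.append(f"{s.open_critical} critical defect(s) still open")
    if s.open_major > max_major:
        unmet.append(f"{s.open_major} major defects open (limit {max_major})")
    if s.open_minor > max_minor:
        unmet.append(f"{s.open_minor} minor defects open (limit {max_minor})")
    if not s.key_workflows_passed:
        unmet.append("key workflows not validated")
    if not s.build_deployable:
        unmet.append("no stable, deployable build")
    return unmet


ready = RunSummary(planned=120, executed=120, open_critical=0, open_major=3,
                   open_minor=8, key_workflows_passed=True, build_deployable=True)
not_ready = RunSummary(planned=120, executed=112, open_critical=1, open_major=3,
                       open_minor=12, key_workflows_passed=True, build_deployable=True)

print(unmet_exit_criteria(ready))
print(unmet_exit_criteria(not_ready))
```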

📚 Read More: How to make a test execution report?

6. Hardware-software configuration

This is your test environment, where the actual testing takes place. It should mirror the production environment as closely as possible and provide additional tools and features that help testers do their job. A test environment has two major parts:

- Hardware: servers, computers, mobile devices, or specific hardware setups such as routers, network switches, and firewalls

- Software: operating systems, browsers, databases, APIs, testing tools, third-party services, and dependent software packages

For performance testing specifically, you’ll also need to set up the network components to simulate real-world networking conditions (network bandwidth, latency simulations, proxy settings, firewalls, VPN configurations, or network protocols).

Here’s an example for you:

| Category | Mobile Testing | Web Testing |
|---|---|---|
| Hardware | iPhone 13 Pro (iOS 15)<br>iPad Air (iOS 14)<br>Google Pixel 6 (Android 12)<br>Samsung Galaxy S21 (Android 11) | Windows 10: Intel Core i7, 16GB RAM, 256GB SSD<br>macOS Monterey: Apple M1 Chip, 16GB RAM, 512GB SSD |

Shared by mobile and web testing:

| Category | Configuration |
|---|---|
| Software | Google Chrome (across versions) |
| Network Config | Simulate 3G, 4G, 5G, and high-latency environments<br>Wi-Fi and Ethernet connections |
| Database | MySQL 8.0 |
| CI/CD Integration | Jenkins or GitLab CI |
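Even a short configuration list multiplies quickly once every device is combined with every network profile, which is why a strategy usually prioritizes a subset. The Python sketch below expands the example configurations into a full execution matrix with `itertools.product`. Pairing mobile devices with the simulated cellular profiles (plus Wi-Fi) and desktops with Wi-Fi/Ethernet is an assumption made for illustration.

```python
from itertools import product

# Values taken from the example table; the network pairing per platform is an assumption.
MOBILE_DEVICES = [
    "iPhone 13 Pro (iOS 15)",
    "iPad Air (iOS 14)",
    "Google Pixel 6 (Android 12)",
    "Samsung Galaxy S21 (Android 11)",
]
DESKTOPS = ["Windows 10", "macOS Monterey"]
MOBILE_NETWORKS = ["3G", "4G", "5G", "high-latency", "Wi-Fi"]
DESKTOP_NETWORKS = ["Wi-Fi", "Ethernet"]


def build_matrix() -> list:
    """Return every (platform, target, network) combination to run."""
    mobile = [("mobile", d, n) for d, n in product(MOBILE_DEVICES, MOBILE_NETWORKS)]
    web = [("web", d, n) for d, n in product(DESKTOPS, DESKTOP_NETWORKS)]
    return mobile + web


matrix = build_matrix()
print(len(matrix), "configurations")
for row in matrix[:3]:
    print(row)
```

Adding each Chrome version under test as another dimension would multiply the count again, so it is worth deciding up front which combinations are essential.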

7. Testing tools

If you go with manual testing, you will need a test management system to keep track of all of your manual test results. The most common choice is Jira, an all-in-one project management tool that also helps with bug tracking.

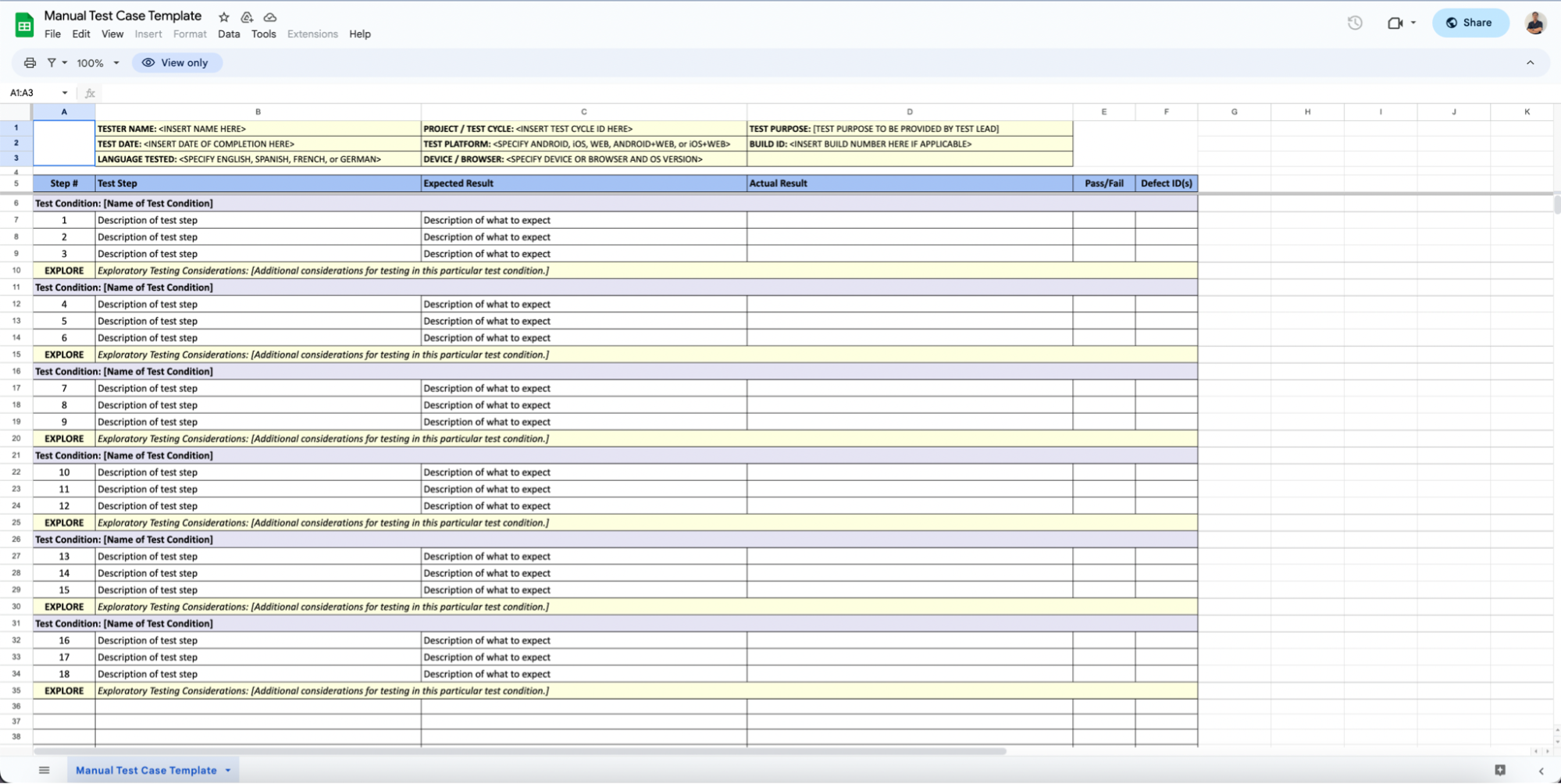

After testing, testers can document the bugs they find in a Google Sheets spreadsheet that looks like this.

Of course, as you scale, this approach proves to be inefficient. At a certain point, a dedicated test management system that seamlessly integrates with other test activities (including test creation, execution, reporting) is a better option. This system should also support automation testing.

Imagine a system where you can write your test scripts; store all of your test objects, test data, and artifacts; run your tests in the environment of your choice; and then generate detailed reports on your findings.

To achieve such a level of comprehensiveness, testers have 2 options:

- Build a tailor-made test automation framework from scratch

- Find a vendor-based solution

Each comes with its own advantages and disadvantages. The former is highly customizable but requires significant technical expertise to pull off, while the latter offers out-of-the-box features you can use immediately but requires some investment.

The real question is: what ROI can you get from each of these choices? Check out our article on how to calculate test automation ROI.
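As a rough illustration, one common way to frame automation ROI is (savings - investment) / investment. The Python sketch below uses that framing with hypothetical inputs: savings are only the manual labor hours avoided, and investment is setup (framework build effort or license) plus maintenance over the same period. All numbers are made up for the example and are not benchmarks for either option.

```python
def automation_roi(manual_hours_per_run: float, automated_hours_per_run: float,
                   runs: int, hourly_rate: float,
                   setup_cost: float, maintenance_cost: float) -> float:
    """ROI as a fraction: (savings - investment) / investment.

    savings    = labor hours avoided across all runs, priced at hourly_rate
    investment = one-off setup (build effort or license) + maintenance
    """
    savings = (manual_hours_per_run - automated_hours_per_run) * runs * hourly_rate
    investment = setup_cost + maintenance_cost
    return (savings - investment) / investment


# Hypothetical comparison over the same 50 regression runs at $40/hour.
build_in_house = automation_roi(10, 1, runs=50, hourly_rate=40,
                                setup_cost=15_000, maintenance_cost=5_000)
buy_vendor_tool = automation_roi(10, 1, runs=50, hourly_rate=40,
                                 setup_cost=10_000, maintenance_cost=2_000)
print(f"in-house: {build_in_house:.0%}, vendor: {buy_vendor_tool:.0%}")
```

With different inputs (more runs, higher license fees, or cheaper in-house maintenance) the ranking can flip, which is exactly why it is worth running the numbers for your own project.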

8. Test deliverables

This is what success looks like. Test deliverables are derived from the test objectives you set earlier.

Define which artifacts and documents should be produced during the testing process to communicate the progress and findings of testing activities. Since a test strategy is a high-level document, you don’t need to go into the details of each deliverable; a brief outline of the items the team wants to create is enough.

9. Testing measurements and metrics

Establish the key performance indicators (KPIs) and success metrics for the project. These metrics not only measure the efficiency and quality of the testing process but also give team members a common goal and a shared language for communication.

Some common testing metrics include:

- Test Coverage: Measures the percentage of the codebase that is tested by your suite of tests.

- Defect Density: Indicates the number of defects found in a specific module or unit of code, typically calculated as defects per thousand lines of code (KLOC). A lower defect density reflects better code quality, while a higher one suggests more defect-prone code.

- Defect Leakage: Refers to defects that escape detection in one testing phase and are found in subsequent phases or after release.

- Mean Time to Failure (MTTF): Represents the average time that a system or component operates before failing.

These metrics will later be visualized in a test report.

10. Risks

List the potential risks along with clear plans to mitigate them, and even contingency plans to adopt if these risks materialize.

Testers generally perform a risk analysis, scoring each risk as probability of occurrence × impact, to decide which risks to address first.

For example, after planning, the team realizes that the timeline is extremely tight and that they lack the technical expertise to deliver the objectives. This is a high-probability, high-impact scenario, so they must have a contingency plan: changing the objectives, investing in the team’s expertise, or outsourcing entirely to meet the delivery date.
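The probability × impact calculation is easy to keep in a script or spreadsheet. The Python sketch below scores risks on an assumed 1-5 scale for both factors and sorts them so the highest-priority risk comes first. The scale, the "high"/"medium" thresholds, and the risk entries are illustrative assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    probability: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int       # assumed scale: 1 (negligible) to 5 (severe)

    @property
    def score(self) -> int:
        return self.probability * self.impact

    @property
    def level(self) -> str:
        # Illustrative thresholds on the 1-25 score range.
        if self.score >= 15:
            return "high"
        if self.score >= 8:
            return "medium"
        return "low"


def prioritize(risks: list[Risk]) -> list[Risk]:
    """Highest score first, so mitigation effort goes to the biggest risks."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


risks = [
    Risk("Cross-browser issues on older versions", probability=3, impact=2),
    Risk("Tight timeline and missing technical expertise", probability=5, impact=5),
    Risk("Payment gateway instability under load", probability=3, impact=5),
]
for r in prioritize(risks):
    print(f"{r.score:>2} {r.level:<6} {r.name}")
```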

All of these items in the document should be carefully reviewed by the business team, the QA Lead, and the Development Team Lead. From this document, you will be able to develop detailed test plans for sub-projects or for each sprint.

📚 Read More: Risk-based approach to regression testing: A simple guide

Sample Test Strategy Document

Here’s a sample test strategy for your reference:

| Section | Details |
|---|---|
| 1. Product, Revision, and Overview | Product Name: E-commerce Web Application<br>Revision: v1.5<br>Overview: The product is an online e-commerce platform allowing users to browse products, add items to the cart, and make secure purchases. It includes a responsive web interface, a mobile app, and backend systems for inventory and payment processing. |
| 2. Product History | Previous Versions: v1.0, v1.2, v1.3<br>Defect History: Previous versions had issues with payment gateway integration and cart item persistence. These issues have been addressed through unit and integration testing, leading to improvements in overall system reliability. |
| 3. Features to Be Tested | User Features: Product search, cart functionality, user registration, and checkout.<br>Application Layer: Frontend (React.js), Backend (Node.js), Database (MySQL), API integrations.<br>Mobile App: Shopping experience, push notifications.<br>Server: Load balancing, database synchronization. |
| 4. Features Not to Be Tested | Third-party Loyalty Program Integration: This will be tested in a separate release cycle.<br>Legacy Payment Method: No longer supported and excluded from testing in this release. |
| 5. Configurations to Be Tested | Mobile: iPhone 13 (iOS 15), Google Pixel 6 (Android 12).<br>Desktop: Windows 10, macOS Monterey.<br>Browsers: Chrome 95, Firefox 92, Safari 15, Edge 95.<br>Excluded Configurations: Older versions of Android (<9.0) and iOS (<12). |
| 6. Environmental Requirements | Hardware: Real mobile devices, desktop systems (Intel i7, Apple M1).<br>Network: Simulated network conditions (3G, 4G, Wi-Fi).<br>Software: Testing tools (Selenium, Appium, JMeter).<br>Servers: Cloud-hosted environments on AWS for testing scalability. |
| 7. System Test Methodology | Unit Testing: Verify core functions like search and add-to-cart.<br>Integration Testing: Test interactions between the cart, payment systems, and inventory management.<br>System Testing: Full end-to-end user scenarios (browse, add to cart, checkout, receive confirmation).<br>Performance Testing: Stress testing with JMeter to simulate up to 5,000 concurrent users.<br>Security Testing: OWASP ZAP for vulnerability detection. |
| 8. Initial Test Requirements | Test Strategy: Written by QA personnel and reviewed by the product team.<br>Test Environment Setup: Environments must be fully configured, including staging servers, test data, and mock payment systems.<br>Test Data: Create dummy users and product listings for system-wide testing. |
| 9. System Test Entry Criteria | Basic Functionality Works: All core features (search, login, cart) must function.<br>Unit Tests Passed: 100% of unit tests must pass without error.<br>Code Freeze: All features must be implemented and code must be checked into the repository.<br>Known Bugs Logged: All known issues must be posted to the bug-tracking system. |
| 10. System Test Exit Criteria | All System Tests Executed: All planned tests must be executed.<br>Pass Critical Scenarios: All "happy path" scenarios (user registration, product purchase) must pass.<br>Successful Build: Executable builds must be generated for all supported platforms.<br>Zero Showstopper Bugs: No critical defects or blockers.<br>Maximum Bug Threshold: No more than 5 major bugs and 10 minor bugs. |
| 11. Test Deliverables | Test Plan: Detailed plan covering system, regression, and performance tests.<br>Test Cases: Documented test cases in Jira/TestRail.<br>Test Execution Logs: Record of all tests executed.<br>Defect Reports: Bug-tracking system reports from Jira.<br>Test Coverage Report: Percentage of features and code covered by tests. |
| 12. Testing Measurements & Metrics | Test Coverage: Target 95% coverage across unit, integration, and system tests.<br>Defect Density: Maintain a defect density of < 1 defect per 1,000 lines of code.<br>Performance: Ensure response times of 2 seconds or less for key transactions.<br>Defect Leakage: Ensure no more than 2% defect leakage into production. |
| 13. Risks | Payment Gateway Instability: Could cause transaction failures under high load.<br>Cross-Browser Issues: Potential inconsistencies across older browser versions.<br>High User Load: Performance degradation under more than 5,000 concurrent users.<br>Security: Risk of vulnerabilities due to new user authentication features. |
| 14. References | Product Documentation: Internal API documentation for developers.<br>Test Tools Documentation: Selenium and JMeter configuration guides.<br>External References: OWASP guidelines for security testing. |
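The targets in section 12 of the sample can be written down as data and checked after each test cycle. This Python sketch is one hypothetical way to do it; the metric keys are made up, and treating the response-time target as a single measured value (for example, the slowest key transaction) is an assumption.

```python
from __future__ import annotations

import operator

# Targets from section 12 of the sample strategy: (comparison, limit).
TARGETS = {
    "test_coverage_pct": (operator.ge, 95.0),       # target 95% coverage
    "defect_density_per_kloc": (operator.lt, 1.0),  # < 1 defect per 1,000 lines
    "key_txn_response_s": (operator.le, 2.0),       # 2 seconds or less
    "defect_leakage_pct": (operator.le, 2.0),       # no more than 2% leakage
}


def check_targets(measured: dict[str, float]) -> dict[str, bool]:
    """Map each metric to True if the measured value meets its target."""
    return {name: compare(measured[name], limit) for name, (compare, limit) in TARGETS.items()}


# Hypothetical results from one test cycle.
results = check_targets({
    "test_coverage_pct": 96.2,
    "defect_density_per_kloc": 0.8,
    "key_txn_response_s": 2.4,
    "defect_leakage_pct": 1.5,
})
print([name for name, ok in results.items() if not ok])
```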

When creating a test strategy document, you can create a table with all of the items listed above and hold a brainstorming session with the key stakeholders (project manager, business analyst, QA Lead, and Development Team Lead) to fill in the necessary information for each item. You can use the sample table above as a starting point.

Test Plan vs. Test Strategy

The test strategy document gives a higher-level perspective than the test plan, and the contents of the test plan must align with the direction set by the test strategy.

A test strategy provides general methods for ensuring product quality, tailored to different software types, organizational needs, quality policy compliance, and the overall testing approach.

The test plan, on the other hand, is created for specific projects and considers goals, stakeholders, and risks.

In Agile development, a master plan for the project can be made, with specific sub-plans for each iteration. The table below provides a detailed comparison between the two:

| Aspect | Test Strategy | Test Plan |
|---|---|---|
| Purpose | Provides a high-level approach, objectives, and scope of testing for a software project | Specifies detailed instructions, procedures, and specific tests to be conducted |
| Focus | Testing approach, test levels, types, and techniques | Detailed test objectives, test cases, test data, and expected results |
| Audience | Stakeholders, project managers, senior testing team members | Testing team members, test leads, testers, and stakeholders involved in testing |
| Scope | Entire testing effort across the project | Specific phase, feature, or component of the software |
| Level of Detail | Less detailed and more abstract | Highly detailed, specifying test scenarios, cases, scripts, and data |
| Flexibility | Allows flexibility in accommodating changes in project requirements | Relatively rigid and less prone to changes during the testing phase |
| Longevity | Remains relatively stable throughout the project lifecycle | Evolves throughout the testing process, incorporating feedback and adjustments |

How Katalon Fits in With Any Test Strategy

Katalon is a comprehensive solution that supports test planning, creation, management, execution, maintenance, and reporting for web, API, desktop, and even mobile applications across a wide variety of environments, all in one place, with minimal engineering and programming skill requirements. You can utilize Katalon to support any test strategy without having to adopt and manage extra tools across teams.

Katalon allows QA teams to quickly move from manual testing to automation testing thanks to the built-in keywords feature. These keywords are essentially ready-to-use code snippets that you can drag and drop to construct a full test script without writing any code. There is also a record-and-playback feature that records the sequence of actions you take on your screen and turns it into an automated test script that you can re-execute across a wide range of environments.

After that, all of the test objects, test cases, test suites, and test artifacts created are managed in a centralized Object Repository, enabling better test management. You can even map automated tests to existing manual tests thanks to Jira and Xray integration.

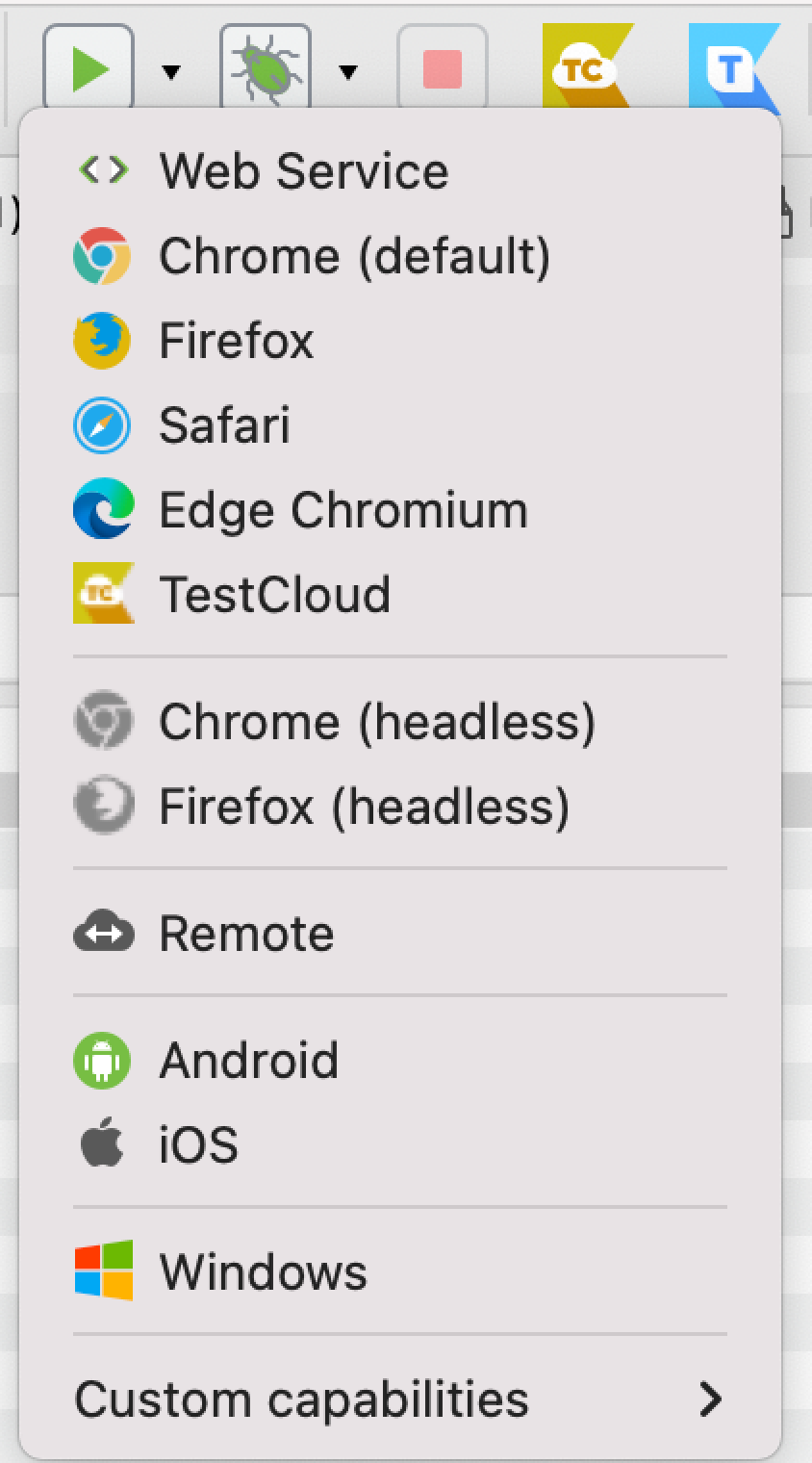

For test execution, Katalon makes it easy to run tests in parallel across browsers, devices, and OS, while everything related to setup and maintenance is already preconfigured. AI-powered features such as Smart Wait, self-healing, scheduling, and parallel execution enable effortless test maintenance.

Finally, for test reporting, Katalon generates detailed analytics on coverage, release, flakiness, and pass/fail trend reports to make faster, more confident decisions.


FAQs on Test Strategy

What is a Test Strategy in software testing?

A test strategy is a high-level document that defines the overall approach, key principles, scope, and objectives for software testing.

It provides a structured framework to guide the testing team in conducting tests efficiently and effectively, helping to determine what, how, and why testing is performed.

What are the key benefits of implementing a Test Strategy?

Implementing a test strategy provides clear direction for testing activities, identifies and mitigates critical risks early, streamlines processes for optimized resource use, promotes adherence to industry standards, enhances teamwork by aligning goals, aids in efficient resource allocation, facilitates monitoring of test progress, and ensures comprehensive validation of functionalities.

What are the main types of Test Strategies?

The article identifies three major types:

1. Static vs. Dynamic testing (reviewing code without execution vs. running software to check behavior).

2. Preventive vs. Reactive testing (addressing known risks early vs. handling unforeseen issues after deployment).

3. Hybrid testing (balancing manual testing with test automation).

What information should be included in a comprehensive Test Strategy document?

A test strategy document should include test levels (like the test pyramid: unit, integration, E2E), defined objectives and scope, various testing types (by application, layer, attribute, approach, granularity, techniques), the chosen test approach (e.g., Agile, Shift-Left), clear entry and exit criteria, hardware-software configurations, selected testing tools, anticipated test deliverables, key testing measurements and metrics, and identified risks with mitigation plans.

How does a Test Strategy differ from a Test Plan?

A Test Strategy is a high-level document that outlines the general approach to testing across an entire project or organization, remaining relatively stable.

In contrast, a Test Plan is a detailed, project-specific document that specifies the concrete instructions, procedures, test cases, and data for a particular phase, feature, or component of the software, and can evolve throughout the testing process.