Integration Testing: How-to, Examples & Free Test Plan (2025)


Components that work well on their own don't always work well when integrated with each other. That's why integration testing is essential in your testing project.

In this article, I'll show you:

- What integration testing is

- Why integration testing is crucial in any testing project

- The different types of integration testing and how to do each of them

- Integration testing best practices

Let's dive in!

What is integration testing?

Integration testing is a type of testing in which multiple parts of a software system are combined, either all at once or step by step, and then tested as a group.

The goal of integration testing is to check whether different software components work seamlessly with each other.

Example of integration testing

Let's say that you are testing a software system with 10 different modules.

Each of these modules has been unit tested. They all pass, meaning they work well individually. That's a good sign. However, can we be sure that those individual parts will work smoothly together?

Conflicts can always arise, just as people who work well individually may not always work well as a team.

Once these modules are integrated, things can go wrong in several ways, such as:

- Inconsistent code logic

- Inconsistent data rules

- Conflicts with third-party services

- Inadequate exception handling

That's why testers must perform integration testing to uncover those scenarios. Out of the 10 modules, testers can first choose two modules to integrate. If these modules interact smoothly, they proceed with another pair, and so on, until all modules are tested.
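The pairwise step can be made concrete with a minimal Python sketch that uses the standard `unittest` module. `PriceCalculator` and `Cart` are hypothetical modules invented for illustration. The test wires the real `PriceCalculator` into the real `Cart` instead of mocking it, so it exercises the interface between the two, such as both sides agreeing that prices are integer cents.

```python
import unittest

# Hypothetical module 1: prices are handled as integer cents.
class PriceCalculator:
    def line_total(self, unit_price_cents: int, quantity: int) -> int:
        return unit_price_cents * quantity

# Hypothetical module 2: a cart that delegates pricing to module 1.
class Cart:
    def __init__(self, calculator: PriceCalculator):
        self.calculator = calculator
        self.items = []  # list of (unit_price_cents, quantity) tuples

    def add(self, unit_price_cents: int, quantity: int) -> None:
        if quantity <= 0:
            raise ValueError("quantity must be positive")
        self.items.append((unit_price_cents, quantity))

    def total(self) -> int:
        return sum(self.calculator.line_total(p, q) for p, q in self.items)

class CartPricingIntegrationTest(unittest.TestCase):
    """Wires the real PriceCalculator into the real Cart: no mocks."""

    def setUp(self):
        self.cart = Cart(PriceCalculator())

    def test_total_combines_line_totals(self):
        self.cart.add(1999, 2)  # 2 x $19.99
        self.cart.add(500, 1)   # 1 x $5.00
        self.assertEqual(self.cart.total(), 4498)

    def test_empty_cart_totals_zero(self):
        self.assertEqual(self.cart.total(), 0)
```

To run it, save it to a file and use `python -m unittest <file>.py`. When this pair passes, you wire in the next module and add a test for it in the same way.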

Approaches to integration testing

There are 2 common approaches to integration testing:

- Big Bang Approach

- Incremental Approach

1. Big Bang integration testing

Big Bang integration testing is an approach in which all modules are integrated and tested at once as a single combined system.

Big Bang integration testing is not performed until all components have successfully passed unit testing.

Advantages of Big Bang integration testing:

- Suitable for simple and small systems with low dependency among components.

- Requires little to no planning in advance.

- Easy to set up since all modules are integrated simultaneously.

- Reduced coordination and management effort as testing happens in one major phase.

Disadvantages of Big Bang integration testing:

- Costly and time-consuming for large systems since testing cannot begin until every module is developed.

- Late defect detection due to delayed testing.

- Difficult to isolate and pinpoint bugs in specific modules.

- Debugging becomes challenging due to the heavy integration of multiple modules.

Best Practices when using Big Bang testing:

- Define expected interactions between units before testing to reduce missed defects.

- Use detailed logging for easier fault localization.

- Reserve Big Bang testing for small and simple applications.
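The following Python sketch shows a Big Bang run. It assumes three hypothetical modules (`Inventory`, `Pricing`, `Checkout`) that have already passed their unit tests. `build_system()` wires every real module together in a single step. Each module also logs through its own named logger, which follows the detailed-logging practice above: if the combined test fails, the last log lines show which module the request reached.

```python
import logging

logging.basicConfig(level=logging.DEBUG, format="%(name)s: %(message)s")

# Hypothetical modules, all finished and unit tested before integration.
class Inventory:
    log = logging.getLogger("inventory")

    def __init__(self, stock):
        self.stock = dict(stock)

    def reserve(self, sku, qty):
        self.log.debug("reserve %s x%d (in stock: %d)", sku, qty, self.stock.get(sku, 0))
        if self.stock.get(sku, 0) < qty:
            raise RuntimeError(f"insufficient stock for {sku}")
        self.stock[sku] -= qty

class Pricing:
    log = logging.getLogger("pricing")

    def __init__(self, prices):
        self.prices = dict(prices)

    def quote(self, sku, qty):
        total = self.prices[sku] * qty
        self.log.debug("quote %s x%d = %d cents", sku, qty, total)
        return total

class Checkout:
    log = logging.getLogger("checkout")

    def __init__(self, inventory, pricing):
        self.inventory, self.pricing = inventory, pricing

    def place_order(self, sku, qty):
        self.log.debug("place_order %s x%d", sku, qty)
        self.inventory.reserve(sku, qty)
        return self.pricing.quote(sku, qty)

def build_system():
    """Big Bang: every real module is wired together in one step."""
    return Checkout(Inventory({"SHIRT-01": 5}), Pricing({"SHIRT-01": 1999}))

def test_place_order_end_to_end():
    system = build_system()
    assert system.place_order("SHIRT-01", 2) == 3998
    assert system.inventory.stock["SHIRT-01"] == 3
```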

📚 Read More: Big Bang Integration Testing: A Complete Guide

2. Incremental integration testing

Incremental integration testing is an approach in which 2 or more modules with closely related logic are grouped and tested first, then gradually expanded to other modules until the entire system is integrated and tested.

It is more strategic than Big Bang testing, since the grouping and integration order of the modules must be planned in detail in advance.

Advantages:

- Earlier bug detection because modules are integrated and tested as soon as they are developed.

- Easier root cause analysis since modules are tested in smaller groups.

- More flexible overall testing strategy.

Disadvantages:

- Some components may not be developed yet, requiring the creation of stubs and drivers as temporary substitutes.

- You need a complete understanding of the system structure before breaking it into smaller units.

Types of incremental integration testing

You can further divide incremental integration testing into 3 approaches:

- Bottom-up approach: test low-level components first, then move upward.

- Top-down approach: test high-level components first, then move downward.

- Hybrid approach: combines both top-down and bottom-up strategies.
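You can derive the integration order for the bottom-up and top-down approaches from a module dependency graph. The sketch below needs Python 3.9+ and uses only the standard library. `DEPENDS_ON` is a hypothetical eCommerce graph. A topological sort places every module after the modules it depends on, which gives a bottom-up order, and reversing that list gives a top-down order.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency graph: each module maps to the modules it calls into.
DEPENDS_ON = {
    "checkout": {"cart", "payment"},
    "cart": {"pricing", "inventory"},
    "payment": {"currency"},
    "pricing": {"currency"},
    "inventory": set(),
    "currency": set(),
}

def bottom_up_order(graph):
    """Dependencies before dependents: lowest-level modules come first."""
    return list(TopologicalSorter(graph).static_order())

def top_down_order(graph):
    """Reverse of the bottom-up order: highest-level modules come first."""
    return list(reversed(bottom_up_order(graph)))

print("bottom-up:", bottom_up_order(DEPENDS_ON))
print("top-down: ", top_down_order(DEPENDS_ON))
```

When several modules sit at the same level, more than one valid order exists. The printed lists may therefore differ from a hand-written plan while still respecting every dependency. If the graph contains a cycle, `static_order()` raises `graphlib.CycleError`, which is a sign that the module boundaries need a second look.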

The distinction between low-level and high-level components depends on their position in the system hierarchy:

- Low-level components handle fundamental or simple tasks (e.g., data structures, utility functions).

- High-level components handle complex, system-wide operations such as processing, managing data, or securing workflows.

If you apply this to an eCommerce website, the breakdown looks like this:

| Aspect | Low-Level Modules | High-Level Modules |
| --- | --- | --- |
| Complexity | Simple functionalities | Complex, multi-functional |
| Scope | Focused on specific tasks | Comprehensive functionalities |
| Granularity | Smaller and modular | Larger and more integrated |

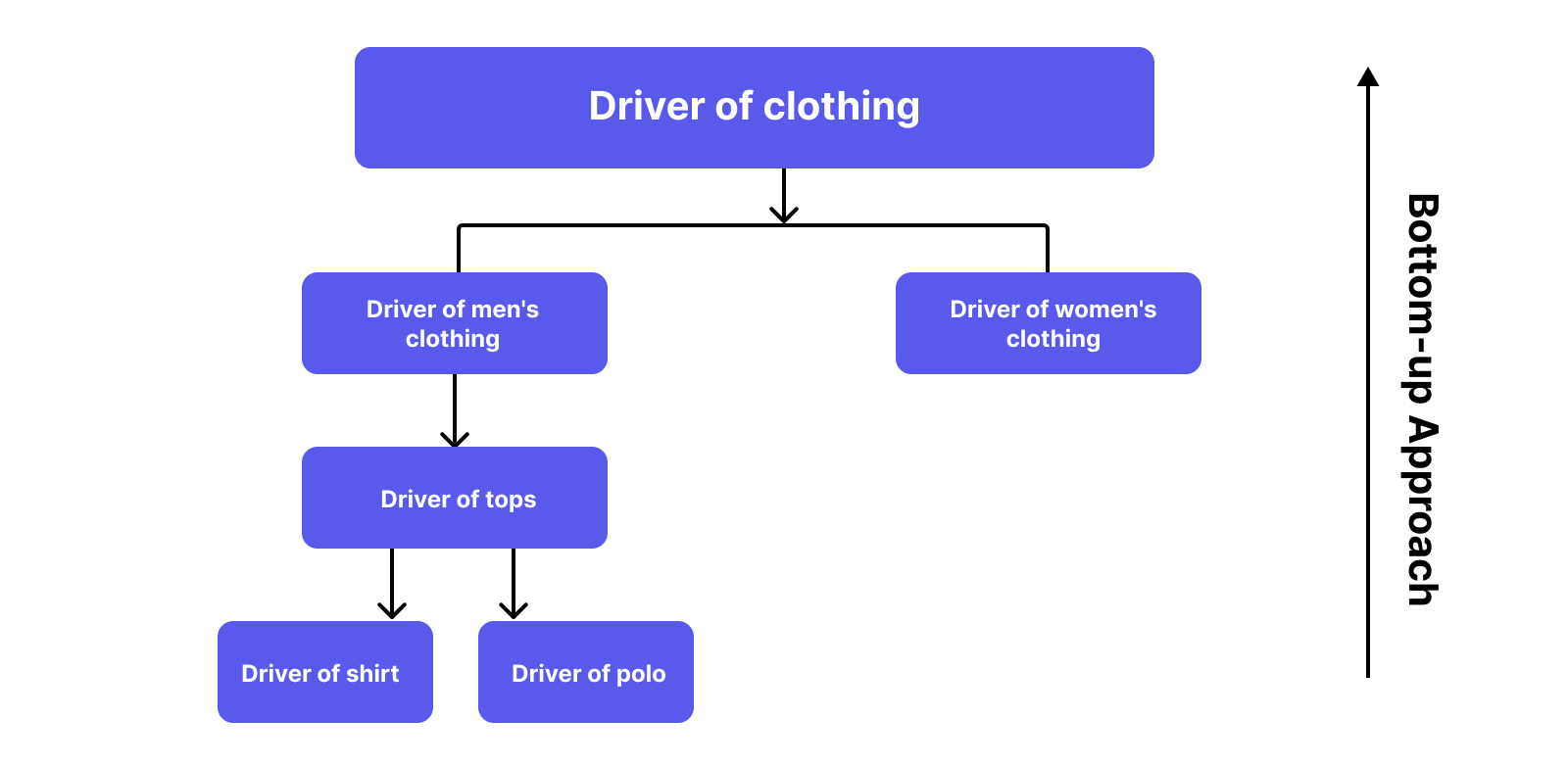

Bottom-up integration testing explained

With bottom-up integration testing, you start integrating modules at the lowest level, then gradually move to higher-level modules.

As shown in the diagram, the lower-level components represent specific items such as shirts and polos. These fall under the “Tops” category, which belongs to “Men’s clothing”, and ultimately “Clothing”.

With the bottom-up approach, you test from the most specific components toward the more generic, comprehensive modules.

Use bottom-up integration testing when:

- Most complexity lies in lower-level modules

- The team develops lower-level components first

- Bug localization is a priority and detailed isolation is required

- Higher-level modules are still being developed or likely to change
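Here is a minimal Python sketch of a bottom-up step. `TaxCalculator` and `PriceCalculator` are hypothetical low-level modules that already exist. The higher-level checkout module does not exist yet, so `checkout_driver` acts as a driver: a small piece of test code that calls the integrated modules the way the future caller is expected to.

```python
# Real low-level modules (hypothetical), already built and unit tested.
class TaxCalculator:
    def __init__(self, rate_percent: int):
        self.rate_percent = rate_percent

    def tax_cents(self, amount_cents: int) -> int:
        # Round half up to the nearest cent using integer arithmetic.
        return (amount_cents * self.rate_percent + 50) // 100

class PriceCalculator:
    def __init__(self, tax: TaxCalculator):
        self.tax = tax

    def gross_cents(self, unit_price_cents: int, quantity: int) -> int:
        net = unit_price_cents * quantity
        return net + self.tax.tax_cents(net)

def checkout_driver(calculator: PriceCalculator, cart_lines):
    """Driver: a throwaway stand-in for the unfinished checkout module.
    It calls the integrated low-level modules the way checkout is expected to."""
    return sum(calculator.gross_cents(price, qty) for price, qty in cart_lines)

# Integrate the two real low-level modules and exercise them via the driver.
calculator = PriceCalculator(TaxCalculator(rate_percent=8))
assert checkout_driver(calculator, [(1000, 2), (250, 4)]) == 3240
```

After the real checkout module is built, it replaces the driver, and you can reuse the same expectations against it.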

📚 Read More: Bottom-up Integration Testing: A Complete Guide

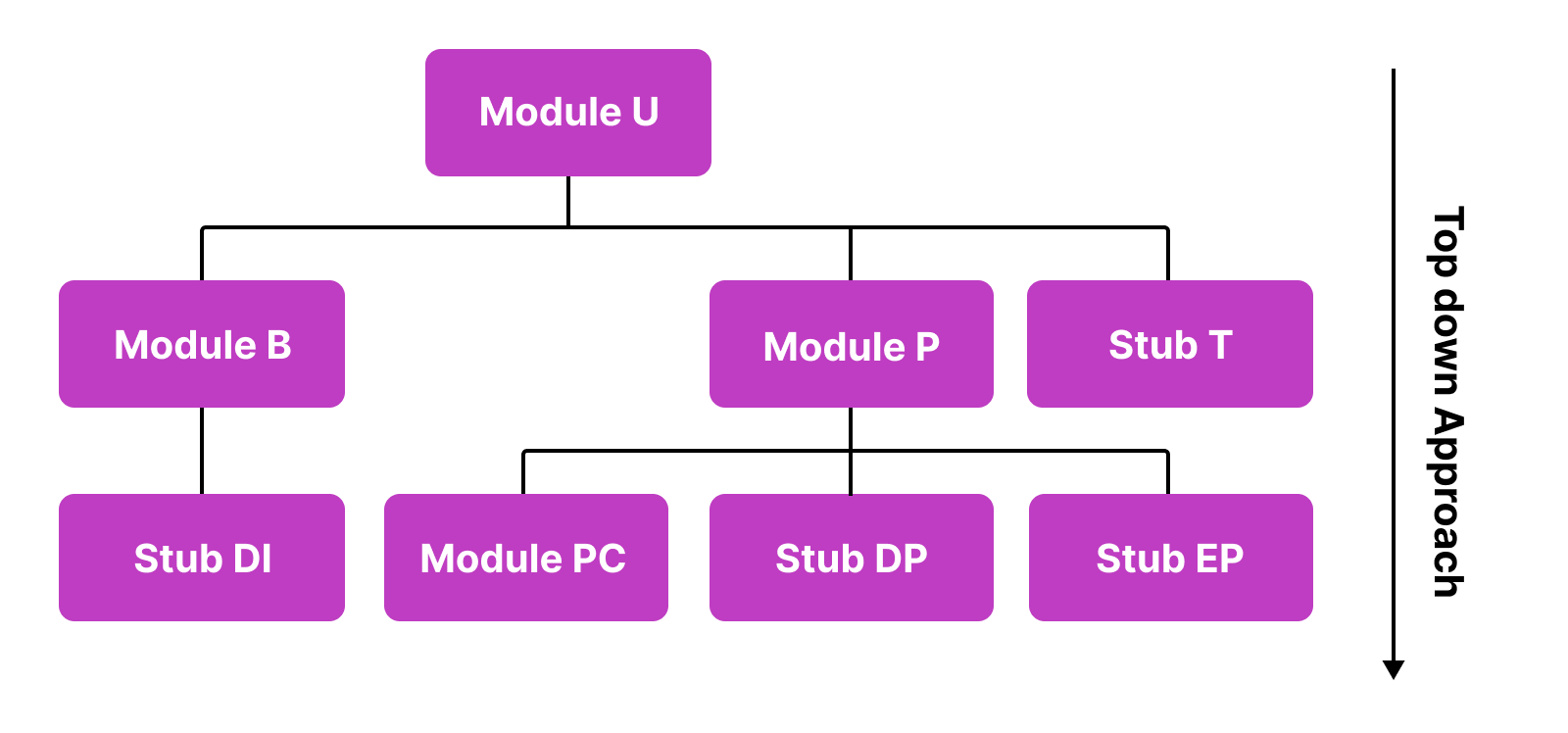

Top-down integration testing explained

With top-down integration testing, testers start with the highest-level modules and move downward toward lower-level modules.

Use top-down integration testing when:

- Most complexity is in higher-level modules

- You want to simulate real-world workflows and user journeys

- Lower-level modules are stable and unlikely to change
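The mirror image of the driver is a top-down step with stubs. In this Python sketch, `CheckoutService` is the hypothetical high-level module under test. `InventoryStub` and `PaymentStub` stand in for lower-level modules that are not finished yet. They return canned answers and record the calls they receive, so the test can check the workflow.

```python
class InventoryStub:
    """Stub: canned answers in place of the unfinished inventory module."""
    def __init__(self, in_stock: bool):
        self.in_stock = in_stock
        self.calls = []

    def is_available(self, sku: str, qty: int) -> bool:
        self.calls.append((sku, qty))
        return self.in_stock

class PaymentStub:
    """Stub: canned answers in place of the unfinished payment module."""
    def __init__(self, approve: bool):
        self.approve = approve
        self.charged = []

    def charge(self, amount_cents: int) -> bool:
        self.charged.append(amount_cents)
        return self.approve

class CheckoutService:
    """Real high-level module under test (hypothetical)."""
    def __init__(self, inventory, payment):
        self.inventory, self.payment = inventory, payment

    def place_order(self, sku: str, qty: int, amount_cents: int) -> str:
        if not self.inventory.is_available(sku, qty):
            return "OUT_OF_STOCK"
        if not self.payment.charge(amount_cents):
            return "PAYMENT_DECLINED"
        return "CONFIRMED"

# Happy path through the high-level workflow.
payment = PaymentStub(approve=True)
service = CheckoutService(InventoryStub(in_stock=True), payment)
assert service.place_order("SHIRT-01", 2, 3998) == "CONFIRMED"
assert payment.charged == [3998]

# Out-of-stock path: payment must never be attempted.
payment = PaymentStub(approve=True)
service = CheckoutService(InventoryStub(in_stock=False), payment)
assert service.place_order("SHIRT-01", 2, 3998) == "OUT_OF_STOCK"
assert payment.charged == []
```

Hand-written stubs keep the idea visible, although `unittest.mock.Mock` could serve the same purpose. As each real lower-level module is finished, it replaces its stub and the same workflow tests are rerun.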

📚 Read More: Top-down Integration Testing: A Complete Guide

Hybrid integration testing explained

Hybrid Integration Testing (also known as Sandwich Testing) combines both top-down and bottom-up approaches, running them in parallel.

Advantages:

- Flexibility to tailor testing activities based on project needs

- More efficient use of team resources

- Simultaneous verification of both low-level and high-level components

Disadvantages:

- Requires strong communication to maintain consistency and issue tracking

- Switching between strategies can be difficult for some teams

Integration testing in the test pyramid

If you are building a test strategy, the test pyramid is a useful framework to follow.

The Test Pyramid is a widely used model that illustrates how different types of tests should be distributed within a testing strategy. The idea is to automate the bulk of tests at the lower layers (unit and integration tests) while keeping the more expensive end-to-end tests to a minimum.

The three layers of the test pyramid include:

- Unit Testing: The foundation of the pyramid. These tests validate individual components and are fast to write and execute, making them ideal for automation.

- Integration Testing: The middle layer, focused on verifying how components work together after they have been combined.

- E2E Testing: The top layer. These tests validate the system from the user's perspective. They are fewer in number because they are more complex, slower, and more brittle.

How to decide the ratio between unit testing, integration testing, and E2E testing?

If you follow the Test Pyramid strategy, a common ratio is roughly: 70–80% unit tests, 15–20% integration tests, and 5–10% end-to-end (E2E) tests.

The rationale is that:

- Unit tests are fast, easy to maintain, and catch bugs early. You typically want a large number of these tests to cover most of the codebase and ensure individual components work correctly. A code coverage target of around 80% is often cited as both realistic and effective.

- Integration tests and E2E tests are more expensive and complex, so they are used sparingly, focused on critical interactions and user flows.

Although these ratios serve as a helpful guideline, you should adjust them based on your team size, system complexity, and available resources.
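One quick way to compare an existing suite with these guideline ranges is to compute each layer's share. Below is a minimal Python helper. The ranges encode the 70 to 80% / 15 to 20% / 5 to 10% guideline from above, and the test counts in the example call are made up for illustration.

```python
# Guideline ranges from above, as (min, max) fractions of the whole suite.
PYRAMID_RANGES = {
    "unit": (0.70, 0.80),
    "integration": (0.15, 0.20),
    "e2e": (0.05, 0.10),
}

def pyramid_report(counts: dict) -> dict:
    """Return each layer's share of the suite and whether it is in range."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("empty test suite")
    report = {}
    for layer, (low, high) in PYRAMID_RANGES.items():
        share = counts.get(layer, 0) / total
        report[layer] = (round(share, 3), low <= share <= high)
    return report

print(pyramid_report({"unit": 380, "integration": 85, "e2e": 35}))
# prints {'unit': (0.76, True), 'integration': (0.17, True), 'e2e': (0.07, True)}
```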

Integration testing vs End-to-end testing

Put simply:

- Integration testing checks the interactions between individual components.

- End-to-end testing checks the flow of an entire user journey.

The scope of end-to-end testing is bigger than that of integration testing.

Here is a simple comparison table of the differences between integration testing and end-to-end testing:

| Aspect | End-to-End Testing | Integration Testing |
| --- | --- | --- |
| Purpose | Checks system behavior in real-world scenarios | Checks integration between components |
| Scope | Broader in scope and covers the entire technology stack | Focuses on interactions between components/modules |
| Testing stage | Performed at the end of the development lifecycle before releases | Executed after unit testing and before end-to-end testing |
| Technique | Black-box testing, often uses User Acceptance Testing (UAT) | White-box testing, often uses API testing |

📚 Read More: End-to-end testing vs Integration Testing

Unit testing vs integration testing: Key differences

| Aspect | Unit Testing | Integration Testing |
| --- | --- | --- |
| Scope | Focuses on testing individual units of code (functions or methods). | Focuses on testing interactions between multiple units or modules. |
| Purpose | Verifies that each unit works as intended in isolation. | Verifies that different units or modules work together correctly. |
| Dependencies | Mocks or stubs external dependencies to isolate the unit. | Uses real dependencies to test actual integration behavior. |
| Granularity | Tests small, specific pieces of functionality. | Tests how multiple units collaborate. |
| Execution Environment | Runs easily in a development or CI environment. | Requires an environment where modules can run together. |
| Test Data | Uses small datasets or mocked data. | Often uses more realistic datasets for interaction testing. |
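In practice, the Dependencies row is the difference that matters most. In this Python sketch, `UserRepository` is a hypothetical data-access module. The unit test replaces the database connection with `unittest.mock.Mock`. The integration test runs the same class against a real in-memory SQLite database from the standard `sqlite3` module.

```python
import sqlite3
import unittest
from unittest import mock

class UserRepository:
    """Hypothetical data-access module that talks to a DB-API connection."""
    def __init__(self, conn):
        self.conn = conn

    def add(self, name: str) -> int:
        cur = self.conn.execute("INSERT INTO users (name) VALUES (?)", (name,))
        self.conn.commit()
        return cur.lastrowid

    def find_name(self, user_id: int):
        row = self.conn.execute(
            "SELECT name FROM users WHERE id = ?", (user_id,)
        ).fetchone()
        return row[0] if row else None

class UserRepositoryUnitTest(unittest.TestCase):
    def test_add_returns_row_id_from_connection(self):
        conn = mock.Mock()  # mocked dependency: no real database involved
        conn.execute.return_value.lastrowid = 7
        self.assertEqual(UserRepository(conn).add("ada"), 7)
        conn.commit.assert_called_once()

class UserRepositoryIntegrationTest(unittest.TestCase):
    def setUp(self):
        self.conn = sqlite3.connect(":memory:")  # real database engine
        self.conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")

    def tearDown(self):
        self.conn.close()

    def test_round_trip_through_real_sqlite(self):
        repo = UserRepository(self.conn)
        user_id = repo.add("ada")
        self.assertEqual(repo.find_name(user_id), "ada")
        self.assertIsNone(repo.find_name(user_id + 1))
```

The unit test would still pass if the SQL statement contained a typo, because the mock accepts any query. Only the integration test would catch that kind of error. You can run both classes with `python -m unittest <file>.py`.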

📚 Read More: Unit Testing vs Integration Testing: In-depth Comparison

Integration Testing Best Practices

- Ensure all modules have been unit tested before integration testing.

- Create a clear test plan defining scope, objectives, cases, and required resources.

- Automate repetitive or complex test cases to improve consistency and efficiency.

- Validate input test data to ensure reliable results.

- Run regression tests after each integration step to catch unintended side effects.

Katalon for integration testing

The Katalon Platform is an all-in-one solution for teams to plan, design, and manage test cases in a single place. It supports a wide range of testing types, including API and UI testing, to cover all your quality needs.

1. Increased Test Coverage

UI testing is challenging due to the huge number of devices and browsers in use today. A layout may be perfect on one device but break completely on another. Katalon helps teams stay on budget with cloud-based cross-platform and cross-browser execution support.

2. Reduced Test Maintenance Effort

Katalon is designed to simplify test maintenance. When an application changes, testers must confirm whether existing scripts still work. With its page-object design model and centralized object repository, Katalon makes updates far easier.

By storing locators and artifacts in one place, teams can quickly adjust tests when UI elements or flows change.


FAQs on Integration Testing

What is Integration Testing?

Integration testing is a type of software testing where individual software modules or components are integrated and tested as a unified group to ensure they work seamlessly together and that data flows correctly between them.

What are the main approaches to performing Integration Testing?

The two most common approaches to integration testing are the Big Bang Approach and the Incremental Approach. The Big Bang approach tests all modules simultaneously as a single entity, while the Incremental approach tests modules in smaller, related groups.

How do Top-down and Bottom-up Integration Testing differ?

Top-down and Bottom-up are sub-approaches of Incremental Integration Testing. Top-down testing starts by integrating and testing high-level components first, then gradually moves to lower-level components. Conversely, Bottom-up testing begins with testing low-level components and progressively integrates them upwards to higher-level components.

What is the key difference between Integration Testing and Unit Testing?

Unit testing focuses on verifying the functionality of individual units or components of code in isolation, often using mocks or stubs for dependencies. Integration testing, on the other hand, focuses on verifying the interactions and data flow between multiple integrated units or modules, using real dependencies.

When should Integration Testing be performed in the software development lifecycle?

Integration testing is performed after unit testing is complete for all modules and before end-to-end or system testing. It is crucial to ensure that previously unit-tested modules function correctly when combined, helping to detect interface or interaction issues early in the development cycle.