Cross-Browser Testing Explained: What It Is, Why It Matters, and How to Do It Right

Your web users come in all shapes and sizes, and your web testing should accommodate this diversity. They can browse your website on:

- 9,000+ distinct devices

- 21 different operating systems (including older versions)

- 5 browser engines, past and present (Blink, WebKit, Gecko, and the legacy Trident and EdgeHTML), powering thousands of browsers (Google Chrome, Mozilla Firefox, Apple Safari, Microsoft Edge, Opera, etc.)

Together, they create more than 63,000 possible browser-device-OS combinations that testers must consider when performing web testing.

This is why cross-browser testing is so crucial.

What is cross-browser testing?

Cross-browser testing is a type of testing where testers assess the compatibility and functionality of a website across various browsers, operating systems, and their versions.

Cross-browser testing arose from the inherent differences among popular web browsers (such as Chrome, Firefox, and Safari) in their rendering engines, HTML/CSS support, JavaScript interpretation, and performance characteristics. These differences lead to inconsistencies in user experience.

The end goal of cross-browser testing is to eliminate these inconsistencies and deliver a consistent experience to users, no matter which browser they use to access the website or web application.

Why is cross-browser testing important?

There are many ways browsers can impact the web experience:

- Rendering differences: Browsers interpret HTML and CSS rules differently, leading to variations in the rendering of web pages. For instance, one browser can display a particular font or element slightly larger or smaller than another browser, causing misalignments or inconsistent layouts.

- JavaScript compatibility: Some browsers lack support for certain JavaScript APIs, so a feature may work flawlessly in one browser but throw errors or behave unexpectedly in another. Cross-browser testing helps developers uncover these compatibility issues and implement workarounds or alternative approaches to ensure consistent behavior.

- Performance variations: Browsers differ in how they render pages, execute JavaScript, and manage memory. A website that performs well in one browser can load slowly in another.

Without cross-browser testing, issues like the following can occur, possibly without you knowing:

- Dropdown menus fail to display correctly in certain browsers.

- Video or audio content does not play in specific browser versions.

- Hover effects or tooltips do not function as expected.

- The website layout appears distorted or broken on mobile devices.

- JavaScript animations or transitions do not work smoothly in some browsers.

- Page elements overlap or are misaligned at certain screen resolutions.

- Clicking on a button or link does not trigger the intended action in a specific browser.

- Background images fail to load or appear distorted in certain browsers.

- CSS gradients or shadows are rendered differently, affecting the visual appearance.

- Web fonts do not render correctly or show fallback fonts in specific browsers.

- Media queries or responsive design features do not adapt properly across different browsers.

- Web application functionalities, like drag-and-drop or file uploads, do not work in specific browsers.

All of these issues call for cross-browser testing.

If you’d like to dive deeper into real-world issues teams face, our Top Mobile & Cross-Browser Testing Challenges webinar shares practical lessons and solutions from the field.

What to test in cross-browser testing?

The QA team needs to prepare a list of items to check during cross-browser compatibility testing. Common areas include:

- Base functionality: Verify that the website's core features work as expected in every browser. Important features to include in the test plan:

  - Navigation: Test the navigation menu, links, and buttons to ensure they lead to the correct pages and sections of the website.

  - Forms and inputs: Test form validation, input fields, submission, and error handling.

  - Search: Test that the search feature returns the expected results.

  - User registration and login: Test that account registration works without friction and that verification emails reach the right address.

  - Site-specific functionality: Test that features specific to your website (such as eCommerce product features or SaaS features) work as expected.

  - Third-party integrations: Test the functionality and data exchange between the website and third-party services or APIs.

- Design: Verify that the website looks consistent across browsers. Testers usually use visual testing tools and pixel-based comparison to identify discrepancies in layouts, fonts, and other visual elements.

- Accessibility: Verify that the website works with assistive technologies and is usable by people with disabilities. Accessibility testing checks, for example, whether every element can be reached with the keyboard alone, whether color contrast is sufficient, and whether images have alt text.

- Responsiveness: Verify that the layout adapts correctly to different screen sizes and resolutions. Since Google moved to mobile-first indexing, which primarily uses the mobile version of a page for indexing and ranking, a layout that works well on small screens matters more than ever.
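
The Design and Accessibility checks above can be sketched with the Python standard library alone. The first function is a minimal pixel-based comparison, assuming both screenshots were already captured at the same size and decoded into rows of (r, g, b) tuples; real visual testing tools add smarter handling of dynamic regions and anti-aliasing. The second part implements the WCAG 2.x relative-luminance and contrast-ratio formulas, where level AA asks for at least 4.5:1 for normal text and 3:1 for large text. The function names and the `tolerance` default are illustrative assumptions, not any tool's API.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]   # one pixel, 0-255 per channel
Image = List[List[RGB]]      # a screenshot as rows of pixels


def pixel_diff_ratio(baseline: Image, candidate: Image, tolerance: int = 16) -> float:
    """Return the fraction of pixels that differ between two same-sized screenshots."""
    if len(baseline) != len(candidate) or any(
        len(row_a) != len(row_b) for row_a, row_b in zip(baseline, candidate)
    ):
        raise ValueError("screenshots must have identical dimensions")
    total = sum(len(row) for row in baseline)
    if total == 0:
        return 0.0
    changed = 0
    for row_a, row_b in zip(baseline, candidate):
        for pixel_a, pixel_b in zip(row_a, row_b):
            # Count a pixel as changed only if some channel moves past the tolerance,
            # so tiny anti-aliasing differences between engines are ignored.
            if max(abs(a - b) for a, b in zip(pixel_a, pixel_b)) > tolerance:
                changed += 1
    return changed / total


def relative_luminance(color: RGB) -> float:
    """WCAG 2.x relative luminance of an sRGB color."""
    def linearize(value: int) -> float:
        c = value / 255
        return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4

    r, g, b = (linearize(v) for v in color)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground: RGB, background: RGB) -> float:
    """WCAG contrast ratio (L1 + 0.05) / (L2 + 0.05), where L1 is the lighter color."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


def meets_wcag_aa(foreground: RGB, background: RGB, large_text: bool = False) -> bool:
    """Level AA: at least 4.5:1 for normal text, 3:1 for large text."""
    return contrast_ratio(foreground, background) >= (3.0 if large_text else 4.5)
```

For example, gray text `#767676` on a white background has a contrast ratio of about 4.54:1 and passes AA for normal text, while `#777777` falls just short of 4.5:1.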

When to do cross-browser testing?

Cross-browser testing should be done:

- Before launch: To ensure the website or application works seamlessly across popular browsers and devices.

- After major updates: To verify that new features or changes don’t break compatibility.

- During regular maintenance: To catch any issues caused by browser updates or changes in user behavior.

- When targeting a new audience: To ensure compatibility with browsers or devices popular in a new market.

How to do cross-browser testing?

The cross-browser testing and bug-fixing workflow for a project can be roughly divided into the following six phases (in fact, the Software Testing Life Cycle, or STLC, which applies to any type of testing):

- Requirement Analysis

- Test Planning

- Environment Setup

- Test Case Development

- Test Execution

- Test Cycle Closure

1. Plan for cross browser testing

During the planning phase, discussions with the client or business analyst help define what needs to be tested. A test plan outlines the testing requirements, available resources, and schedules. While testing the entire application on all browsers is ideal, time and cost limitations make it more practical to test 100% of the application on one major browser and focus only on critical features for other browsers.

Next, analyze the target audience's browsing habits, devices, and other factors. Use data from client analytics, competitor statistics, or geographic trends to determine key testing platforms.

For example, an eCommerce site targeting North American customers might:

- Work seamlessly on the latest versions of Chrome, Firefox, Edge, Safari, and Opera.

- Provide basic functionality for older browsers like IE 8 and 9.

- Comply with WCAG AA accessibility standards.

Once testing platforms are identified, revisit the feature requirements and technology choices in the test plan.
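
The planning trade-off above (the full suite on one major browser, critical features only on the others, chosen from analytics data) can be sketched as a small helper. It assumes you have exported each browser's share of traffic as a fraction; the `coverage_target` parameter and the "full"/"critical" suite labels are hypothetical names for illustration, and a real plan would usually add OS and device dimensions too.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class PlannedRun:
    browser: str
    suite: str  # "full" for the primary browser, "critical" for the rest


def plan_browser_matrix(
    traffic_share: Dict[str, float], coverage_target: float = 0.9
) -> List[PlannedRun]:
    """Pick the most-used browsers until their combined traffic share reaches coverage_target.

    The browser with the largest share gets the full suite; every other selected
    browser gets only the critical-feature suite.
    """
    ranked = sorted(traffic_share.items(), key=lambda item: item[1], reverse=True)
    plan: List[PlannedRun] = []
    covered = 0.0
    for browser, share in ranked:
        if covered >= coverage_target:
            break  # enough of the audience is covered; skip the long tail
        plan.append(PlannedRun(browser, "full" if not plan else "critical"))
        covered += share
    return plan


if __name__ == "__main__":
    # Hypothetical analytics numbers, for illustration only.
    shares = {"Chrome": 0.60, "Safari": 0.20, "Edge": 0.10, "Firefox": 0.06, "Opera": 0.04}
    for run in plan_browser_matrix(shares, coverage_target=0.85):
        print(f"{run.browser}: {run.suite} suite")
```

With these made-up shares and an 85% target, Chrome gets the full suite and Safari and Edge get the critical suite; raising the target pulls Firefox and Opera into the plan.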

2. Choose between manual testing and automated testing

Manual testing involves testers accessing websites through various browsers and manually performing test cases to identify bugs. While straightforward, it is time-consuming, prone to errors, and not scalable for repetitive tasks.

Automated testing uses tools to create and run test cases, improving efficiency, accuracy, and consistency. Teams can either build an in-house tool or buy one from a vendor. A good cross-browser testing tool should:

- Offer remote access to test machines (for example, through a VPN) and support performance testing.

- Provide screenshots to review how pages appear across browsers.

- Support a wide range of browsers, versions, and screen resolutions.

- Support web and mobile applications, including private and local pages.

Automate repetitive tests and use manual testing for ad-hoc, exploratory, and usability tests.

3. Set up the test environment

Setting up a test environment is challenging and expensive if using physical machines. Testers would need various devices (Windows PC, Mac, Linux, iPhone, Android devices, tablets) along with older versions of these systems. Managing test cases and results across such devices is difficult without a centralized system.

Common solutions include:

- Emulators, Simulators, or Virtual Machines (VMs): These simulate the systems needed for testing, reducing hardware costs. However, they may not faithfully reproduce real-device behavior, especially on mobile platforms.

- Remote Access Services: Testers can access real devices remotely to perform tests without physical ownership, offering control and customization.

- Cloud-Based Testing Platforms: These provide ready-to-use combinations of browsers and operating systems, automation tools, collaboration features, and managed infrastructure, allowing testers to focus on critical tasks instead of maintenance.
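
Selenium Grid and many cloud platforms accept sessions through the W3C WebDriver protocol: the client sends `POST /session` with a JSON body whose `capabilities.alwaysMatch` object uses standard names such as `browserName`, `browserVersion`, and `platformName`. The sketch below builds one such body per browser × OS combination. It assumes the provider accepts `"latest"` as a version and platform strings like `"Windows 11"` or `"macOS 14"`; those values are provider conventions, not part of the standard, and vendor-specific options (credentials, build names) are left out.

```python
import itertools
import json
from typing import Dict, Iterable, List


def is_supported(browser: str, platform: str) -> bool:
    """Safari runs only on Apple platforms, so Safari-on-Windows/Linux pairs are skipped."""
    return browser.lower() != "safari" or platform.lower().startswith(("mac", "ios", "ipados"))


def new_session_payloads(
    browsers: Iterable[str], platforms: Iterable[str], browser_version: str = "latest"
) -> List[Dict]:
    """Build one W3C WebDriver "New Session" request body per supported combination."""
    payloads = []
    for browser, platform in itertools.product(browsers, platforms):
        if not is_supported(browser, platform):
            continue
        payloads.append(
            {
                "capabilities": {
                    "alwaysMatch": {
                        # Standard W3C capability names; accepted values vary by provider.
                        "browserName": browser,
                        "browserVersion": browser_version,
                        "platformName": platform,
                    }
                }
            }
        )
    return payloads


if __name__ == "__main__":
    bodies = new_session_payloads(["chrome", "firefox", "safari"], ["Windows 11", "macOS 14"])
    print(len(bodies), "sessions")
    print(json.dumps(bodies[0], indent=2))
```

Generating the matrix in code rather than by hand makes it easy to drop impossible pairs (such as Safari on Windows) and to keep the combination list in one place.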

4. Develop test cases

For manual testing, testers can use Katalon's AI-powered test case generation, which integrates with JIRA. Using ChatGPT, the feature turns natural-language input into well-structured, accurate test cases, saving time and reducing manual effort.

After integrating Katalon with JIRA, installing the "Katalon - Test Automation for JIRA" plugin, and setting up the API key, a "Katalon manual test cases" button will appear in JIRA tickets. Clicking this button allows the Katalon Manual Test Case Generator to analyze the ticket title and description, then automatically create detailed manual test cases for you.

In one example, Katalon generated 10 manual test cases within seconds. You can save these test cases to Katalon Test Management, which tracks their status in real time.

For automation testing, you can use Built-in Keywords and Record-and-Playback. Built-in Keywords are prewritten code snippets that you can drag and drop to build a complete test case without writing any code. Record-and-Playback records the actions you perform on screen and turns them into an automated test script that you can re-execute on any browser or device.
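
The general idea behind keyword-driven tests can be illustrated in plain Python: a test is a list of readable steps, and a small library maps each keyword to an action, so the same steps can be replayed on any browser. This is not Katalon's implementation or API; the keyword names, `FakeBrowser`, and `run_keyword_test` are hypothetical, and `FakeBrowser` only records actions where a real setup would drive an actual browser.

```python
from typing import Callable, Dict, List, Tuple


class FakeBrowser:
    """Hypothetical stand-in for a real browser driver: it only records actions."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.url = ""
        self.log: List[str] = []

    def open(self, url: str) -> None:
        self.url = url
        self.log.append(f"open {url}")

    def click(self, selector: str) -> None:
        self.log.append(f"click {selector}")

    def set_text(self, selector: str, text: str) -> None:
        self.log.append(f"type {text!r} into {selector}")


def verify_url(browser: FakeBrowser, expected: str) -> None:
    if browser.url != expected:
        raise AssertionError(f"{browser.name}: expected {expected}, got {browser.url}")


# Hypothetical keyword library: each readable keyword maps to an action.
KEYWORDS: Dict[str, Callable[..., None]] = {
    "Open Browser": lambda browser, url: browser.open(url),
    "Click": lambda browser, selector: browser.click(selector),
    "Set Text": lambda browser, selector, text: browser.set_text(selector, text),
    "Verify URL": verify_url,
}


def run_keyword_test(steps: List[Tuple[str, ...]], browser: FakeBrowser) -> List[str]:
    """Execute each (keyword, *arguments) step in order against one browser."""
    for keyword, *args in steps:
        if keyword not in KEYWORDS:
            raise KeyError(f"unknown keyword: {keyword}")
        KEYWORDS[keyword](browser, *args)
    return browser.log


if __name__ == "__main__":
    login_test = [
        ("Open Browser", "https://example.com/login"),
        ("Verify URL", "https://example.com/login"),
        ("Set Text", "#email", "qa@example.com"),
        ("Click", "#submit"),
    ]
    for name in ("chrome", "firefox"):
        print(name, run_keyword_test(login_test, FakeBrowser(name)))
```

A recorder fits the same model: it would emit the list of steps, and playback is simply running that list again on another browser.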

5. Execute the tests

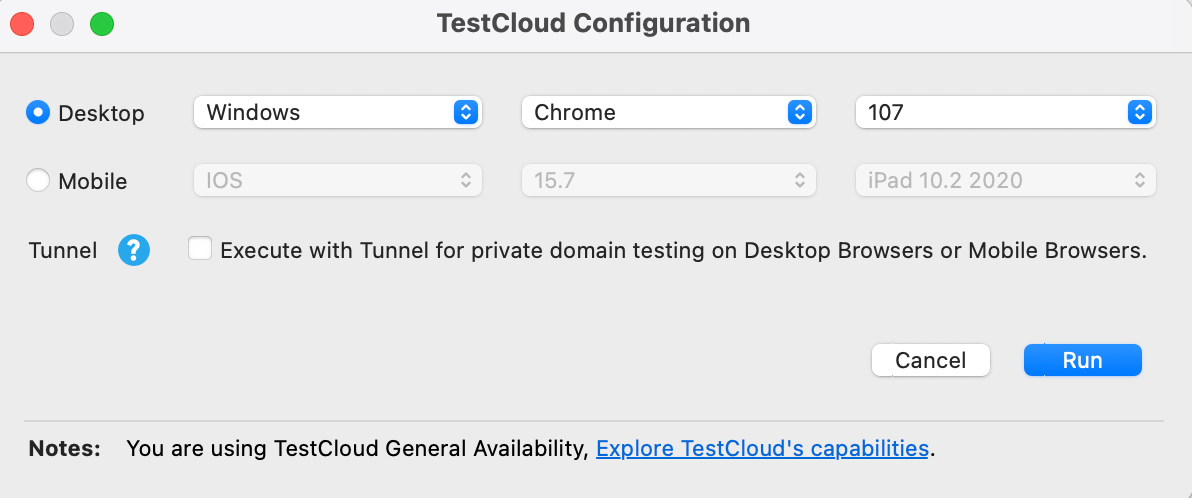

With manual testing, testers open each browser, run the tests they have planned, and record the results by hand. With automation testing, they configure the environments to execute on and then run the tests. In Katalon TestCloud, for example, testers can build a test script and then select the specific browser and operating system combinations to run it on.

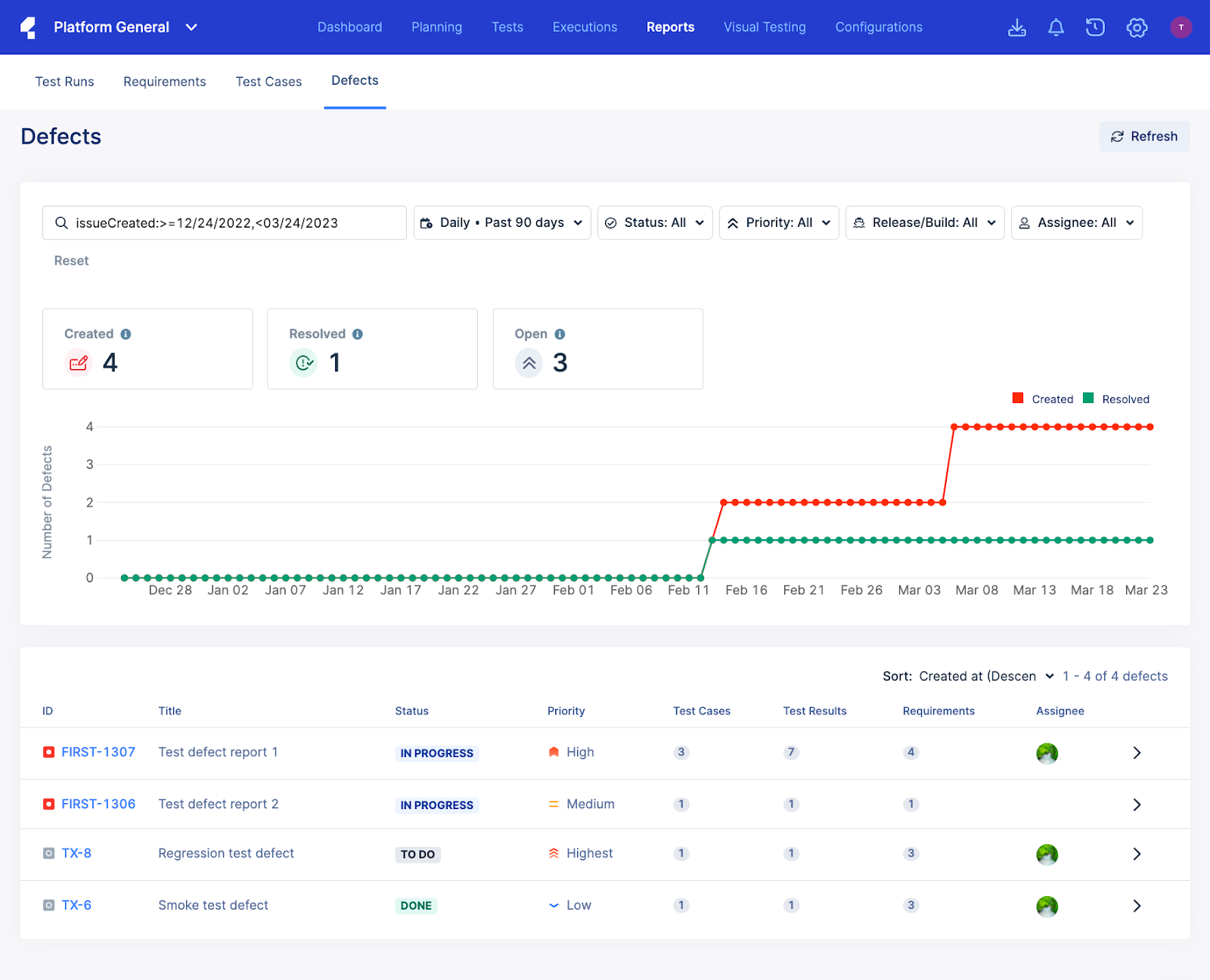
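
Running one automated test across many combinations is usually done in parallel, with one result recorded per combination. The sketch below assumes the test is any Python callable that takes a browser and a platform and raises `AssertionError` when a check fails; in practice it would open a session on a grid or cloud platform. It separates genuine test failures from setup errors so that infrastructure problems are not reported as product bugs. `run_across` and its parameters are illustrative names.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Tuple

Combo = Tuple[str, str]  # (browser, platform)


def run_across(
    combos: List[Combo], test: Callable[[str, str], None], max_workers: int = 4
) -> Dict[Combo, str]:
    """Run the same test on every combination in parallel and record one status per combination."""

    def run_one(combo: Combo) -> Tuple[Combo, str]:
        browser, platform = combo
        try:
            test(browser, platform)
            return combo, "PASS"
        except AssertionError as exc:  # the check itself failed
            return combo, f"FAIL: {exc}"
        except Exception as exc:  # setup or infrastructure problem, not a product bug
            return combo, f"ERROR: {exc}"

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map returns results in the same order as `combos`.
        return dict(pool.map(run_one, combos))
```

Threads suit this job because each remote test spends most of its time waiting on the network, and `max_workers` can be matched to the number of parallel sessions your plan allows.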

6. Report the defects and document results

Finally, testers report the results to the development and design teams so they can start troubleshooting. Once the developers have fixed a bug, the testing team re-executes the relevant tests to confirm the fix. Document these results carefully for future reference and analysis.
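
When tests are re-executed after a fix, comparing the new run against the previous one shows at a glance which bugs are confirmed fixed, which fixes did not work, and whether anything new broke. The sketch assumes results are stored as a mapping from a test ID to a pass/fail boolean; the ID format (such as `"checkout@safari"`) and the category names are hypothetical.

```python
from typing import Dict, List


def compare_runs(previous: Dict[str, bool], current: Dict[str, bool]) -> Dict[str, List[str]]:
    """Classify each test in the current run against the previous run (True = passed)."""
    report: Dict[str, List[str]] = {"fixed": [], "still_failing": [], "regressed": [], "new": []}
    for test_id, passed_now in sorted(current.items()):
        if test_id not in previous:
            report["new"].append(test_id)
        elif not previous[test_id] and passed_now:
            report["fixed"].append(test_id)  # failed before, passes now: the fix is confirmed
        elif not previous[test_id]:
            report["still_failing"].append(test_id)  # failed both times: the fix did not work
        elif not passed_now:
            report["regressed"].append(test_id)  # passed before, fails now: a new bug
    return report
```

Tests that passed in both runs are left out, so the report focuses on what the development team needs to act on; saving each run's results also gives you the documentation trail for later analysis.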

FAQs

1. How can I decide which browsers to include in cross-browser testing?

Analyze your audience’s browsing habits using tools like Google Analytics or similar traffic analysis platforms. Focus on the most commonly used browsers, devices, and versions for your target market. Include a mix of modern browsers and older ones if a significant portion of your audience still uses them.

2. Can cross-browser testing help improve website accessibility?

Yes. Cross-browser testing can reveal accessibility issues, such as broken screen reader support or keyboard navigation, that appear only in certain browsers. This helps your website comply with accessibility standards like WCAG and provide an inclusive user experience.

3. What’s the best approach to testing legacy browsers?

For legacy browsers, focus on testing critical features rather than the entire application. Cloud platforms like BrowserStack or Sauce Labs give you access to older browser versions, so you can verify basic functionality, especially for applications targeting regions with slower tech adoption.

4. How do responsive design and cross-browser testing relate?

Responsive design ensures that a website adapts to different screen sizes, while cross-browser testing ensures it works well across different browsers. Both go hand in hand to deliver a consistent user experience on a variety of devices and platforms.

5. How do I manage testing for different browser versions efficiently?

Use cloud-based platforms like LambdaTest or BrowserStack that provide pre-configured environments for multiple browser versions. Automate repetitive test cases for older versions while manually testing unique or complex features.

6. Are there specific tools for cross-browser testing on mobile devices?

Yes. Appium automates native, hybrid, and mobile web applications on iOS and Android, while cloud platforms like BrowserStack and Sauce Labs provide access to a wide variety of real and virtual mobile devices, operating systems, and browsers for comprehensive testing.

7. How do browser updates affect cross-browser testing?

Frequent browser updates can introduce new features or deprecate older ones, potentially breaking your application. To handle this, schedule periodic tests to check compatibility with the latest versions and maintain awareness of browser release schedules.

8. Can cross-browser testing detect performance issues?

Yes. Tools like Lighthouse (which audits pages in Chrome) or Katalon can add performance checks to your testing. Running such checks across browsers helps identify rendering delays, slow-loading elements, and browser-specific bottlenecks that affect user experience.
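
Once page-load timings have been collected in each browser (for example, from the Navigation Timing entry returned by `performance.getEntriesByType("navigation")`), flagging browser-specific slowdowns is a simple comparison. The sketch below assumes several samples per browser in milliseconds and uses the median, which is less sensitive to a single noisy measurement than the mean; the 1.5× threshold is an arbitrary assumption you would tune for your site.

```python
from statistics import median
from typing import Dict, List


def flag_slow_browsers(load_times_ms: Dict[str, List[float]], threshold: float = 1.5) -> List[str]:
    """Return browsers whose median load time exceeds `threshold` times the fastest browser's median."""
    # Medians are less sensitive than averages to a single noisy measurement.
    medians = {browser: median(samples) for browser, samples in load_times_ms.items() if samples}
    if not medians:
        return []
    fastest = min(medians.values())
    return sorted(browser for browser, value in medians.items() if value > threshold * fastest)
```

For instance, with medians of 850 ms in Chrome, 950 ms in Firefox, and 1,500 ms in Safari, only Safari exceeds 1.5× the fastest median and would be flagged for investigation.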
