The Pesticide Paradox in Software Testing

There’s an interesting term in agriculture called the pesticide paradox. When an insect infestation threatens a farmer’s hard-earned harvest, he sprays the crop with pesticide, killing most of the insects in an instant. What a glorious win of man against nature! But there’s a catch: the tiny percentage of insects that survive tend to be the ones with a natural resistance to the pesticide.

Now that they have less competition, they thrive, reproduce, and pass on their (stronger) genes to the next generation. The more pesticide the farmer sprays, the more those insects adapt and become harder to control over time. Eventually, the pesticide becomes ineffective, and the farmer has to resort to stronger chemicals that result in severe environmental damage.

The same pesticide paradox happens in software testing.

What is the Pesticide Paradox in Software Testing?

The Pesticide Paradox in software testing was first introduced by Boris Beizer, a notable figure in the field of software engineering, in his book "Software Testing Techniques", published in the 1980s.

The idea is that repeating the same test cases over time results in the software "adapting," where defects become less likely to be detected because the tests no longer cover new or evolving areas of the application. As software is updated—through new features, bug fixes, or refactoring—test cases that were once effective may no longer catch newly introduced bugs.

Causes of the Pesticide Paradox

The pesticide paradox gained traction in the testing community as software complexity grew, particularly during the rise of automated testing in the late 1990s and 2000s. When test automation became more common, QA teams noticed that running the same automated tests repeatedly did not always uncover new defects.

There are 2 primary reasons for this:

1. Automated test scripts don’t catch new bugs; they check for known ones.

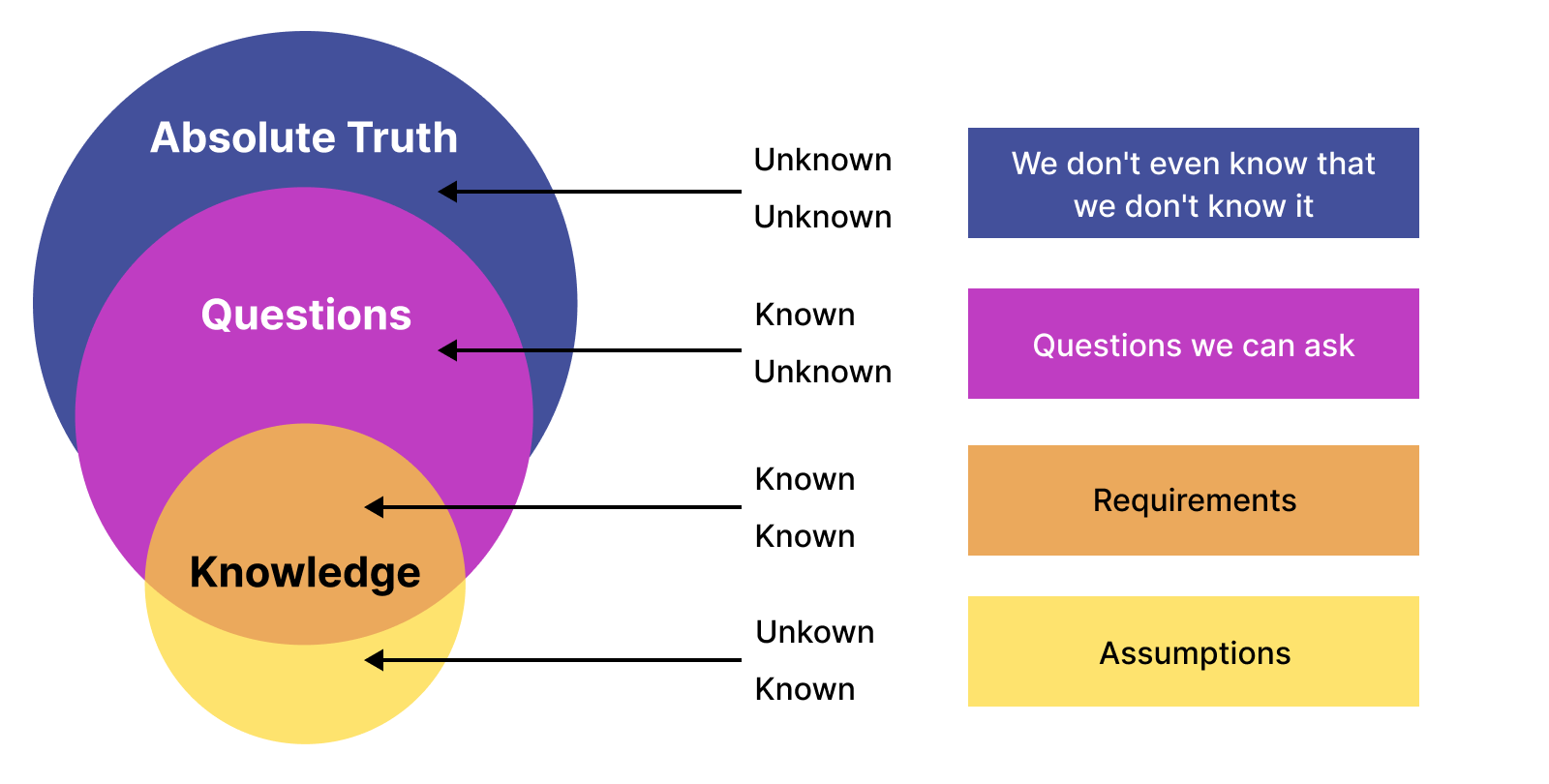

Let’s look at this Venn diagram below to have a better understanding.

Old and existing bugs fall within the Assumptions/Requirements/Questions section of our awareness. We know that there can very well be a bug in that specific software module because we have seen it before.

However, the real bugs lie in the Unknown Unknown domain: we don’t even know that we don’t know about them. Automated scripts are designed to test only the specific scenarios, conditions, or inputs that the developers can foresee. If you are not aware that a bug exists, how can you write a test script for it?

Imagine you are testing The Sims 4 game. You know that a bug will occur at the character customization stage if the player inputs an unrealistically long username, so you write an automated test script for it.

But what if a bug happens when the user simultaneously tries to switch between worlds and save the game while a household is experiencing a fire? These are edge cases that don’t happen frequently, but when they do, they usually bring disaster.

The point is: automated test cases (the pesticide) do wonders at checking for known bugs, but for the less explored parts of the application, we need manual testing to cover the blind spots of automation.
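As a minimal sketch of that distinction, the Python snippet below checks the one scenario we already know about. The `validate_username` function and the 32-character limit are hypothetical stand-ins for illustration, not actual game code.

```python
MAX_USERNAME_LENGTH = 32  # assumed limit, for illustration only


def validate_username(name: str) -> bool:
    """Accept non-empty names up to the assumed maximum length."""
    return 0 < len(name) <= MAX_USERNAME_LENGTH


def test_rejects_overlong_username() -> None:
    # The known bug: an unrealistically long name must be rejected.
    assert not validate_username("x" * 10_000)


def test_accepts_ordinary_username() -> None:
    # A normal name should still be accepted.
    assert validate_username("Bella Goth")
```

Both checks can pass on every run and still say nothing about saving while switching worlds during a fire. Nobody wrote a check for that scenario because nobody anticipated it.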

2. A system-under-test continuously evolves, but a test case is static.

Even well-designed test cases can become less effective over time if they don’t change. In today’s highly dynamic, fast-paced, and ever-changing tech landscape, staying the same is just asking for obsolescence.

This makes sense: software keeps growing as new features are added, while an automated test case is created for a specific codebase at a specific point in time. When that codebase (i.e. the code that causes the bug) changes, the test script (i.e. the pesticide) must be updated to reflect the changes.
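To make this concrete, here is a small hypothetical sketch in Python. The `shipping_cost` function, its prices, and the `express` option are invented for illustration. The test was written for the first release, before the `express` option existed.

```python
def shipping_cost(weight_kg: float, express: bool = False) -> float:
    """Hypothetical pricing function; `express` was added in a later release."""
    base = 5.0 + 1.5 * weight_kg
    if express:
        # Deliberate bug for illustration: the surcharge should be added.
        return base - 10.0
    return base


def test_standard_shipping() -> None:
    # Written for the first release; it still passes after the change.
    assert shipping_cost(2.0) == 8.0
```

The old test keeps passing, so the suite looks healthy, yet `shipping_cost(2.0, express=True)` returns `-2.0`. Only a test added for the new option would expose the bug.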

Strategies To Overcome The Pesticide Paradox

1. Have a fine balance between automation testing and manual testing

Automation testing is awesome with all of the benefits it can bring. However, it is unrealistic to rely solely on automation testing. Do not put all your eggs in one basket. When you adopt a hybrid approach of automation testing and manual testing, you get the best of both worlds.

How to do that? Use manual exploratory testing to discover new bugs. These are the more elusive bugs that usually take 3+ interactions with the system to trigger. Then, in the next sprint or release, write automated scripts that check for those bugs.

2. Update the test suite regularly to keep it relevant

Over time, when new functionalities are added, UI is redesigned, and old features are removed, test cases must be updated accordingly. Running obsolete tests wastes resources without adding any extra value, so don’t reuse the same tests over and over. Instead, update them regularly.

After each release cycle, it is a recommended practice to step back and identify redundant tests. If a test no longer serves a purpose (e.g. tests covering deprecated features), it should be removed. Similarly, if a feature is updated, the tests covering that feature should also be checked to ensure that they still align with the current software behavior.
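One way to support such a review is to tag each test with the feature it covers and flag the tests whose feature no longer exists. The sketch below assumes that kind of metadata is available; the `CaseRecord` type, the test names, and the feature names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CaseRecord:
    """One automated test and the feature it covers (hypothetical metadata)."""
    name: str
    feature: str


def find_obsolete(cases: list[CaseRecord], active_features: set[str]) -> list[CaseRecord]:
    """Return the cases whose feature is no longer part of the product."""
    return [case for case in cases if case.feature not in active_features]


cases = [
    CaseRecord("test_login_with_password", "login"),
    CaseRecord("test_export_to_fax", "fax_export"),
    CaseRecord("test_checkout_total", "checkout"),
]
active = {"login", "checkout"}

for case in find_obsolete(cases, active):
    print(f"Review or remove: {case.name}")
```

A flagged test is a candidate for review, not automatic deletion: someone still has to confirm that the feature is really gone.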

3. Incorporate CI/CD

In a CI/CD pipeline, every code change, whether it's a new feature, bug fix, or refactor, is integrated into the main codebase regularly, often several times a day. Thanks to this, outdated tests are flagged when they fail due to recent code changes, pushing the team to update or replace them.

A well-configured CI/CD pipeline can also automatically run regression tests on every build. This ensures that new code does not break existing functionality. Such an instant feedback loop keeps your test suites dynamic and relevant.

4. Make your test design modular

You need a foundation to make test maintenance easier. Enter modular test design.

Modular test design means breaking your software down into smaller, reusable units and creating separate test cases for each of those units. It promotes reusability, scalability, and maintainability because:

- When application changes occur (e.g., button renaming or form structure changes), only a small section of the test needs to be updated.

- Modular functions like login processes or form filling can be reused across multiple test cases.

- Adding new tests is easier as you can combine existing components to cover different scenarios without writing new tests from scratch.
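The points above can be sketched in plain Python. This is not Katalon's API: `FakeApp` is a hypothetical in-memory stand-in for the application under test, and `login` and `fill_profile` are illustrative reusable steps.

```python
from typing import Optional


class FakeApp:
    """In-memory stand-in for the application under test (hypothetical)."""

    def __init__(self) -> None:
        self.logged_in_user: Optional[str] = None
        self.profile: dict[str, str] = {}

    def submit_login(self, username: str, password: str) -> None:
        if password == "secret":
            self.logged_in_user = username

    def submit_profile(self, fields: dict[str, str]) -> None:
        if self.logged_in_user is None:
            raise PermissionError("login required")
        self.profile.update(fields)


def login(app: FakeApp, username: str = "alice", password: str = "secret") -> None:
    """Reusable step: if the login flow changes, only this function changes."""
    app.submit_login(username, password)


def fill_profile(app: FakeApp, **fields: str) -> None:
    """Reusable step: submit the given profile form fields."""
    app.submit_profile(fields)


def test_login_succeeds() -> None:
    app = FakeApp()
    login(app)
    assert app.logged_in_user == "alice"


def test_profile_update_after_login() -> None:
    # A new scenario built by combining existing steps.
    app = FakeApp()
    login(app)
    fill_profile(app, city="Hanoi")
    assert app.profile == {"city": "Hanoi"}
```

Because both tests go through `login`, a change to the login flow means updating one helper instead of every test that needs a logged-in user.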

Let’s look at an example of how that is done in Katalon Studio. In Katalon, you have an Object Repository that stores all of the test objects that you can reuse across test environments. You can perform a Record-and-Playback by performing the tests as you would manually, and Katalon helps you turn all of your steps into a full executable test script that you can run on the environment of your choice.

Pesticide Paradox FAQs

What is the Pesticide Paradox in software testing?

It’s the phenomenon where running the same test cases repeatedly stops revealing new defects. As the app evolves, those fixed tests no longer exercise newly introduced or less-explored areas, so bugs slip by.

Why does it happen?

Two main reasons:

- Automation checks knowns: Scripts verify scenarios we already anticipate, not “unknown unknowns,” so novel bugs go untested.

- Apps change, tests don’t: The system keeps evolving (features, refactors, UI), but static test cases fall out of sync and lose effectiveness.

What are signs our suite is hitting the paradox?

Fewer new bugs found despite frequent releases, rising escapes to later test levels/production, many passing tests that no longer map to current behavior, and flakiness or obsolescence in parts of the suite.

How do we counter it?

- Blend manual + automation: Use exploratory testing to uncover new defects, then automate the valuable ones.

- Continuously refresh suites: After each release, prune redundant/deprecated cases and update tests for changed features.

- Embed CI/CD: Run regressions on every build to surface outdated tests quickly and force timely updates.

- Design modular tests: Reuse small, maintainable components so updates are localized and adding coverage is easy.

How can Katalon help with this?

Katalon Studio supports modular, maintainable testing via an Object Repository, Record-and-Playback that converts exploratory flows into scripts, and easy reuse of components. Combined, these features make it faster to update, refactor, and expand tests as the application changes—keeping the suite effective over time.